Making every researcher seek grants is a broken model

Author: Jason Crawford

Jason Crawford is the founder & president of Roots of Progress. This essay was originally published on The Roots of Progress blog (1/26/24).

When Galileo wanted to study the heavens through his telescope, he got money from those legendary patrons of the Renaissance, the Medici. To win their favor, when he discovered the moons of Jupiter, he named them the Medicean Stars. Other scientists and inventors offered flashy gifts, such as Cornelis Drebbel’s perpetuum mobile (a sort of astronomical clock) given to King James, who made Drebbel court engineer in return. The other way to do research in those days was to be independently wealthy: the Victorian model of the gentleman scientist.

Eventually we decided that requiring researchers to seek wealthy patrons or have independent means was not the best way to do science. Today, researchers, in their role as “principal investigators” (PIs), apply to science funders for grants. In the US, the NIH spends nearly $48B annually, and the NSF over $11B, mainly to give such grants. Compared to the Renaissance, it is a rational, objective, democratic system.

However, I have come to believe that this principal investigator model is deeply broken and needs to be replaced.

That was the thought at the top of my mind coming out of a working group on “Accelerating Science” hosted by the Santa Fe Institute a few months ago. (The thoughts in this essay were inspired by many of the participants, but I take responsibility for any opinions expressed here. My thinking on this was also influenced by a talk given by James Phillips at a previous metascience conference. My own talk at the workshop was written up here earlier.)

What should we do instead of the PI model? Funding should go in a single block to a relatively large research organization of, say, hundreds of scientists. This is how some of the most effective, transformative labs in the world have been organized, from Bell Labs to the MRC Laboratory of Molecular Biology. It has been referred to as the “block funding” model.

Here’s why I think this model works:

Specialization

A principal investigator has to play multiple roles. They have to do science (researcher), recruit and manage grad students or research assistants (manager), maintain a lab budget (administrator), and write grants (fundraiser). These are different roles, and not everyone has the skill or inclination to do them all. The university model adds teaching, a fifth role.

The block organization allows for specialization: researchers can focus on research, managers can manage, and one leader can fundraise for the whole org. This allows each person to do what they are best at and enjoy, and it frees researchers from spending 30–50% of their time writing grants, as is typical for PIs.

I suspect it also creates more of an opportunity for leadership in research. Research leadership involves having a vision for an area to explore that will be highly fruitful—semiconductors, molecular biology, etc.—and then recruiting talent and resources to the cause. This seems more effective when done at the block level.

Side note: the distinction I’m talking about here, between block funding and PI funding, doesn’t say anything about where the funding comes from or how those decisions are made. But today, researchers are often asked to serve on committees that evaluate grants. Making funding decisions is yet another role we add to researchers, and one that also deserves to be its own specialty (especially since having researchers evaluate their own competitors sets up an inherent conflict of interest).

Research freedom and time horizons

There’s nothing inherent to the PI grant model that dictates the size of the grant, the scope of activities it covers, the length of time it is for, or the degree of freedom it allows the researcher. But in practice, PI funding has evolved toward small grants for incremental work, with little freedom for the researcher to change their plans or strategy.

I suspect the block funding model naturally lends itself to larger grants for longer time periods that are more at the vision level. When you’re funding a whole department, you’re funding a mission and placing trust in the leadership of the organization.

Also, breakthroughs are unpredictable, but the more people you have working on things, the more regularly they will happen. A lab can justify itself more easily with regular achievements. In this way one person’s accomplishment provides cover to those who are still toiling away.
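To put rough numbers on that intuition, here is a minimal sketch. It assumes each researcher independently has the same small chance of a breakthrough in a given year; the 2% figure and the function name `prob_at_least_one` are made up for illustration and are not estimates from this essay.

```python
def prob_at_least_one(n_researchers: int, p_individual: float) -> float:
    """Chance that at least one of n independent researchers
    has a breakthrough in a given period."""
    return 1.0 - (1.0 - p_individual) ** n_researchers


# Hypothetical per-researcher yearly breakthrough probability (an assumption).
P_INDIVIDUAL = 0.02

if __name__ == "__main__":
    for n in (1, 10, 100, 300):
        chance = prob_at_least_one(n, P_INDIVIDUAL)
        print(f"{n:>3} researchers: {chance:.1%} chance of at least one breakthrough")
```

Under these assumptions a lone PI rarely has something to show in any given year, while a lab of a few hundred almost always does. Real breakthroughs are neither independent nor equally likely, so treat this as an illustration of the shape of the argument rather than a model.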

Who evaluates researchers

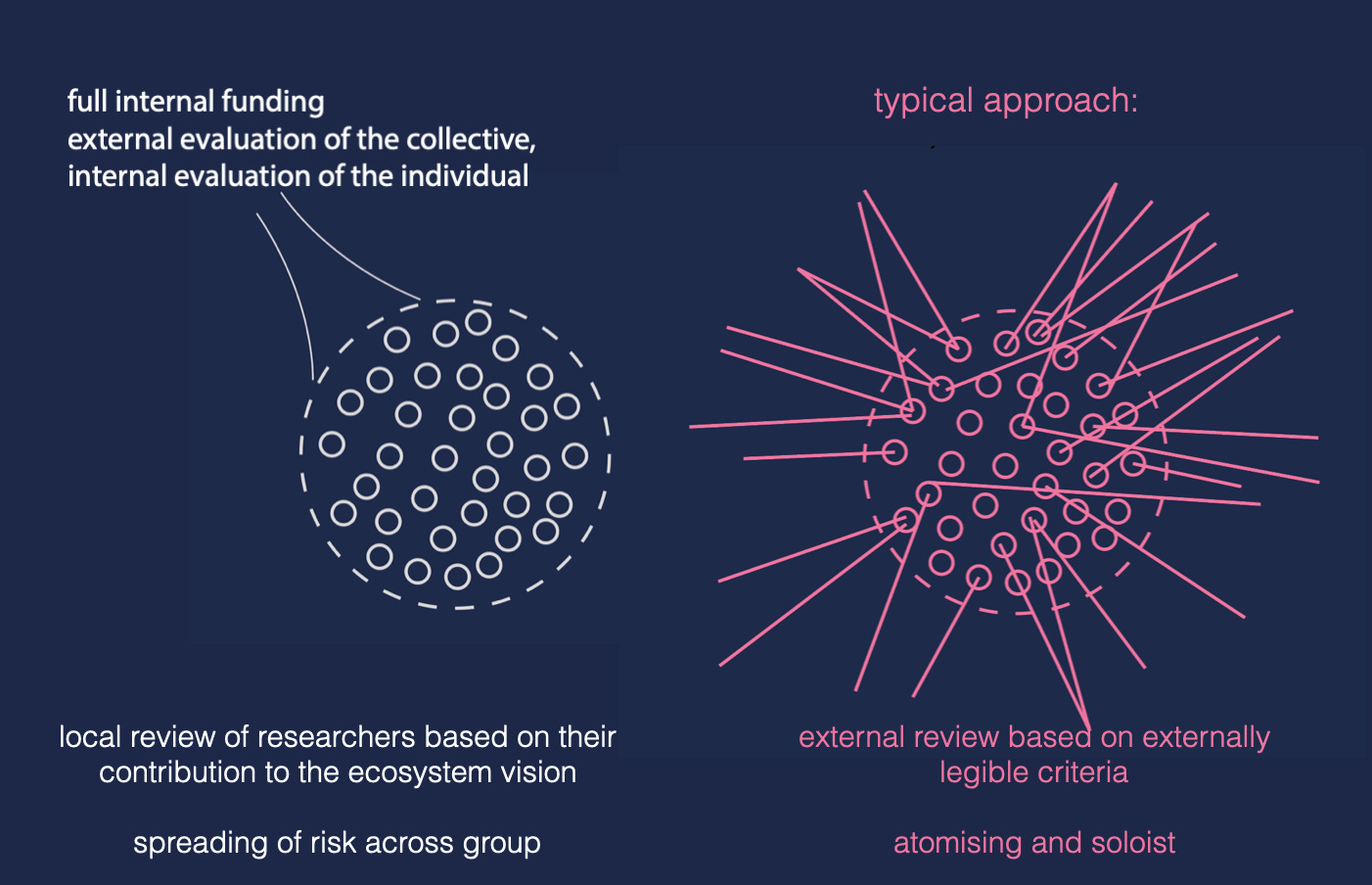

In the PI model, grant applications are evaluated by funding agencies: in effect, each researcher is evaluated by the external world. In the block model, a researcher is evaluated by their manager and their peers. James Phillips illustrates with a diagram:

A manager who knows the researcher well, who has been following their work closely, and who talks to them about it regularly, can simply make better judgments about who is doing good work and whose programs have potential. (And again, developing good judgment about researchers and their potential is a specialized role, as discussed under "Specialization" above.)

Further, when a researcher is evaluated impersonally by an external agency, they need to write up their work formally, which adds overhead to the process. They need to explain and justify their plans, which leads to more conservative proposals. They need to show outcomes regularly, which leads to more incremental work. And funding will disproportionately flow to people who are good at fundraising (which, again, deserves to be a specialized role).

To get scientific breakthroughs, we want to allow talented, dedicated people to pursue hunches for long periods of time. This means we need to trust the process, long before we see the outcome. Several participants in the workshop echoed this theme of trust. Trust like that is much stronger when based on a working relationship, rather than simply on a grant proposal.

If the block model is a superior alternative, how do we move towards it? I don’t have a blueprint. I doubt that existing labs will transform themselves into this model. But funders could signal their interest in funding labs like this, and new labs could be created or proposed on this model and seek such funding. I think the first step is spreading this idea.

PS (Jan 31): After I published this, Michael Nielsen (who has thought about and researched this much more than I have) argued that I had oversimplified and made the case too starkly:

Lots of people make thoughtful proposals for the “correct” approach to funding. They argue that funding scheme A is better than B, or vice versa. This is rhetorically appealing. But I think it’s a mistake. What we need—as Kanjun Qiu and I argue in “A Vision of Metascience”—is a much more diverse set of funding strategies. The right question isn’t “which approach is best” but rather: what mechanisms are we using to adjust the overall portfolio of strategies?

Read his whole thread. Maybe it would be better to say that the PI model is overused today, and block funding is underused.

Interesting. Could this be applied to grant-giving organizations in the arts and humanities as well as the sciences?

So I was thinking recently about review articles, and I was wondering if there was a way to invert the entire process. Right now, we have a pool of money for funding, researchers apply for funding to write papers, and then experts look at the state of the art and periodically write reviews. Wouldn't it be nice if the state of the art was a living document which provided the structure for individual research? After all, nowadays we have Wikis and collaborative editing tools.

The same sort of data structure could probably work for any topic, assuming you have the correct organization and filters. For longevity research, for example, one view could be a picture of the entire human body, with the user able to zoom in on different sections, such as the heart or kidney. Or you could change the view to ascend the conceptual ladder of genomic/protein/cellular/organ systems, and then filter only for sections looking for drugs that target mTOR. You could zoom into rapamycin that way, or you could reach the same result from a view that lists all promising drug candidates sorted by lifespan increase in male mice. At any level of granularity, the result would be an infinitely scrolling list of articles, algorithmically sorted according to some criterion such as age-weighted citation count, beside an autogenerated summary of the state of the art (complete with links), suggestions for views on conceptually adjacent topics, and open questions.

You then solicit researchers to add open questions under different keywords, which other researchers can upvote. Then you go to funders and ask them to attach bounties to these questions, for registered reports, raw data, data analysis, and review, as well as retroactive funding.

Done right, it could become THE journal, with the whole ecosystem: funding, research, communication, with each one being done better than what we currently have.
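As a rough sketch of the filtered, sorted article list described above: the comment does not define "age-weighted citation count", so this assumes it means citations divided by years since publication. The `Article` type, the `view` function, and the sample papers are all hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Article:
    title: str
    year: int
    citations: int
    tags: FrozenSet[str]


def age_weighted_score(article: Article, current_year: int) -> float:
    """Citations per year since publication (assumed definition)."""
    age_in_years = max(current_year - article.year, 0) + 1  # avoid dividing by zero
    return article.citations / age_in_years


def view(articles: List[Article], required_tags: FrozenSet[str],
         current_year: int) -> List[Article]:
    """Keep articles carrying every required tag, highest score first."""
    matching = [a for a in articles if required_tags <= a.tags]
    return sorted(matching,
                  key=lambda a: age_weighted_score(a, current_year),
                  reverse=True)


# Made-up sample data, purely for illustration.
articles = [
    Article("Hypothetical paper A", 2010, 300, frozenset({"mTOR", "rapamycin"})),
    Article("Hypothetical paper B", 2022, 90, frozenset({"mTOR"})),
    Article("Hypothetical paper C", 2020, 500, frozenset({"kidney"})),
]

if __name__ == "__main__":
    for a in view(articles, frozenset({"mTOR"}), current_year=2024):
        print(a.title, round(age_weighted_score(a, 2024), 1))
```

Tags stand in for the zoomable views here: the body-map view and the drug-candidate view would just be different tag filters over the same article pool. A newer paper with fewer total citations can outrank an older one, which is the point of weighting by age.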