Adam Green is the founder of Markov Bio, a startup building interpretable biological simulators to accelerate drug discovery.

Note: this essay was originally published in August, 2022. See this footnote1 for Claude’s assessment of how the predictions of this future history are holding up 2.5 years later (TLDR: “Remarkably prescient”).

The apparent rate of biomedical progress has never been greater.

On the research front, every day more data are collected, more papers published, and more biological mechanisms revealed.

On the clinical front, the pace is also rapid: the FDA is approving more novel therapeutics than ever before, buoyed by a record number of biologics approvals. A flurry of new therapeutic modalities—gene therapies, cell therapies, RNA vaccines, xenogeneic organ transplants—are slated to solve many hard-to-crack diseases over the coming decade.

It seems we are finally on the cusp of ending biomedical stagnation.

Unfortunately, this account isn’t quite correct. Though basic biological research has accelerated, this hasn’t yet translated into commensurate acceleration of medical progress. Despite a few indisputable medical advances, we are, on the whole, still living in an age of bio-medical stagnation.

That said, I’ll make a contrarian case for definite optimism: progress in basic biology research tools has created the potential for accelerating medical progress; however, this potential will not be realized unless we fundamentally rethink our approach to biomedical research. Doing so will require discarding the reductionist, human-legibility-centric research ethos underlying current biomedical research, which has generated the remarkable basic biology progress we have seen, in favor of a purely control-centric ethos based on machine learning. Equipped with this new research ethos, we will realize that control of biological systems obeys empirical scaling laws and is bottlenecked by biocompute. These insights point the way toward accelerating biomedical progress.

Outline

The first half of the essay is descriptive:

1. The Biomedical Problem Setting and Tool Review will outline the biomedical problem setting and provide a whirlwind tour of progress in experimental tools—the most notable progress in biomedical science over the past two decades.

2. The End (of Biomedical Stagnation) Is Nigh will touch on some of the evidence for biomedical stagnation.

3. The Spectrum of Biomedical Research Ethoses will recast the biomedical control problem as a dynamics modeling problem. We’ll then step back and consider the spectrum of research ethoses this problem can be approached from, drawing on three examples from the history of machine learning.

The second half of the essay is more prescriptive, looking toward the future of biomedical progress and how to hasten it:

4. The Scaling Hypothesis of Biomedical Dynamics will explain the scaling hypothesis of biomedical dynamics and why it is correct in the long-run.

5. Biocompute Bottleneck will explain why biocomputational capacity is the primary bottleneck to biomedical progress over the next few decades. We’ll then briefly outline how to build better biocomputers.

6. The Future of Biomedical Research will sketch what the near- and long-term future of biomedical research might look like, and the role non-human agents will play in it.

Niche, Purpose, and Presentation

There are many meta-science articles on why ideas are (or are not) getting harder to find, new organizational and funding models, market failures in science, reproducibility and data-sharing, bureaucracy, and the NIH. These are all intellectually stimulating, and many have spawned promising real-world experiments that will hopefully increase the rate of scientific progress.

This essay, on the other hand, is more applied macro-science than meta-science: an attempt to present a totalizing, object-level theory of an entire macro-field. It is a swinging-for-the-fences, wild idea—the sort of idea that seems to be in relatively short supply. (Consider this a call for similarly sweeping essays written about other fields.) However, because this essay takes on so much, the treatment of some topics is superficial and at points it will likely seem meandering.

All that said, hopefully you can approach this essay as an outsider to biomedicine and come away with a high-level understanding of where the field has been, where it could head, and what it will take to get there. My aim is to abstract out and synthesize the big-picture trends, while simultaneously keeping things grounded in data (but not falling into the common trap of rehashing the research literature without getting to the heart of things, which in the case of biomedicine typically results in naive, indefinite optimism).

1. The Biomedical Problem Setting and Tool Review

Biomedical research is intimidating. At first glance, it seems to span so many subjects and levels of analysis as to be beyond general description. Consider all the subject areas on bioRxiv—how can one speak of the effect of cell stiffness on melanoma metastasis, the evolution of sperm morphology in water fleas, and barriers to chromatin loop extrusion in the same breath? Furthermore, research is accretive and evolving, the frontier constantly advancing in all directions, so this task becomes seemingly more intractable with time.

That said, biomedical research does not defy general description. Though researchers study thousands of different phenomena (as attested to by the thousands of unique research grants awarded by the NIH every year), the scales of which range from nanometers to meters, underneath these particularities lies a unified biomedical problem setting and research approach:

The purpose of biomedicine is to control the state of biological systems toward salutary ends. Biomedical research is the process of figuring out how to do this.

Biomedical research has until now been approached predominantly from a single research ethos. This ethos aims to control biological systems by building mechanistic models of them that are understandable and manipulable by humans (i.e., human-legible). Therefore, we will call this dominant research ethos the “mechanistic mind”.

The history of biomedical research so far has largely been the story of the mechanistic mind’s attempts to make biological systems more legible. Therefore, to understand biomedical research, we must understand the mechanistic mind.

Tools of the Mechanistic Mind

The mechanistic mind builds models of biology by observing and performing experiments on biological systems. To do this, it uses experimental tools.

Because they are the product of the mechanistic mind, these tools have evolved unidirectionally toward reductionism. That is, these tools have evolved to carve and chop biology into ever-smaller conceptual primitives that the mechanistic mind can build models over.

We’d like to understand how the mechanistic mind’s models of biology have evolved. However, delving into its particular phenomena of study—specific biological entities, processes, etc.—would quickly bog us down in details.

But we can exploit a useful heuristic: experimental tools determine the limits of our conceptual primitives, and vice versa. Therefore, we can tell the story of the mechanistic mind’s progress in understanding biology through the lens, as it were, of the experimental tools it has created to do so. By understanding the evolution of these tools, one can understand much of the history of biomedical research.

The extremely brief summary of this evolution goes:

In the second half of the 20th century, biomedical research became molecular (i.e., the study of nucleic acids and proteins). At the turn of the 21st century, with the (near-complete) sequencing of the human genome, molecular biology became computational. The rest is commentary.

Scopes, Scalpels, and Simulacra

That summary leaves a lot to be desired.

To make further sense of it, we can layer on a taxonomy of experimental tools, composed of three classes: scopes, scalpels, and simulacra.

“Scopes” are used to read state from biological systems.

“Scalpels” are used to perturb biological systems.

“Simulacra” are physical models that act as stand-ins for biological systems we’d like to experiment on or observe but can’t.

This experimental tool taxonomy is invariant across eras and physical scales of biomedical research, generalizing beyond any particular paradigm like cell theory or genomics. These three tool classes are, in a sense, the tool archetypes of experimental biomedical research.

Therefore, to understand the evolution in experimental tools (and, consequently, the evolution of the mechanistic mind), we can simply track advances in these three tool classes. We will pick up our (non-exhaustive) review around 15 years ago, near the beginning of the modern computational biology era, when tool progress starts to appreciably accelerate. (We will tackle scopes and scalpels now and leave simulacra for later.) I hope to convey a basic understanding of how these tools are used to interrogate biological systems, the rate they’ve been advancing at, and the resulting inundation of biomedical data we’ll soon face.

Scopes

To reiterate, scopes are tools that read state from biological systems. But this raises an obvious question: what is biological state?

As alluded to earlier, in the mid-20th century biological research became molecular, meaning it became the study of nucleic acids and proteins. Therefore, broadly speaking, biological state is information about the position, content, interaction, etc. of these molecules, and the larger systems they compose, within biological systems. Subfields of the biological sciences are dedicated to interrogating facets of biological state at different scales—structural biologists study the nanometer-scale folding of proteins and nucleic acids, cell biologists study the orchestrated chaos of these molecules within and between cells, developmental biologists study how these cellular processes drive the emergence of higher-order organization during development, and so on.

Regardless of the scale of analysis, advances in tools for reading biological state (i.e., scopes) occur along a few dimensions:

feature-richness

spatio-temporal resolution

spatio-temporal extent

throughput (as measured by speed or cost)

However, there are tradeoffs between these dimensions, and therefore they map out a scopes Pareto frontier.

In the past two decades, we’ve seen incredible advances along all dimensions of this frontier.

To illustrate these advances, we will restrict our focus to the evolution of three representative classes of scopes, each of which highlights a different tradeoff along this frontier:

extremely feature-rich single-cell methods

spatially resolved methods, which have moderate-to-high feature richness and spatio-temporal resolution

light-sheet microscopy, which has large spatio-temporal extent, high spatio-temporal resolution, and low feature-richness

By tracking the evolution of these methods over the past two decades, we’ll gain an intuition for the rate of progress in scopes and where they might head in the coming decades.

But we must first address the metatool which has driven many, but not all, of these advances in scopes: DNA sequencing.

Sequencing as Scopes Metatool

DNA sequencing is popularly known as a tool for reading genetic sequences, like an organism’s genome. But lesser known is the fact that sequencing can be indirectly used to read many other types of biological state. You can therefore think of sequencing as a near-universal solvent or sensor of the scopes class—much progress in scopes has simply come from discovering how to cash out different aspects of biological state in the language of A’s, T’s, G’s and C’s.

The metric of sequencing progress to track is the cost per gigabase ($/Gb): the cost of consumables for sequencing 1 billion base pairs of DNA. Bioinformaticians can fuss about the details—error rates, paired-end vs. single-read, throughput, read quality in recalcitrant regions of the genome like telomeres or repetitive stretches—but for our purposes this metric provides the single best index of progress in sequencing over the past two decades.

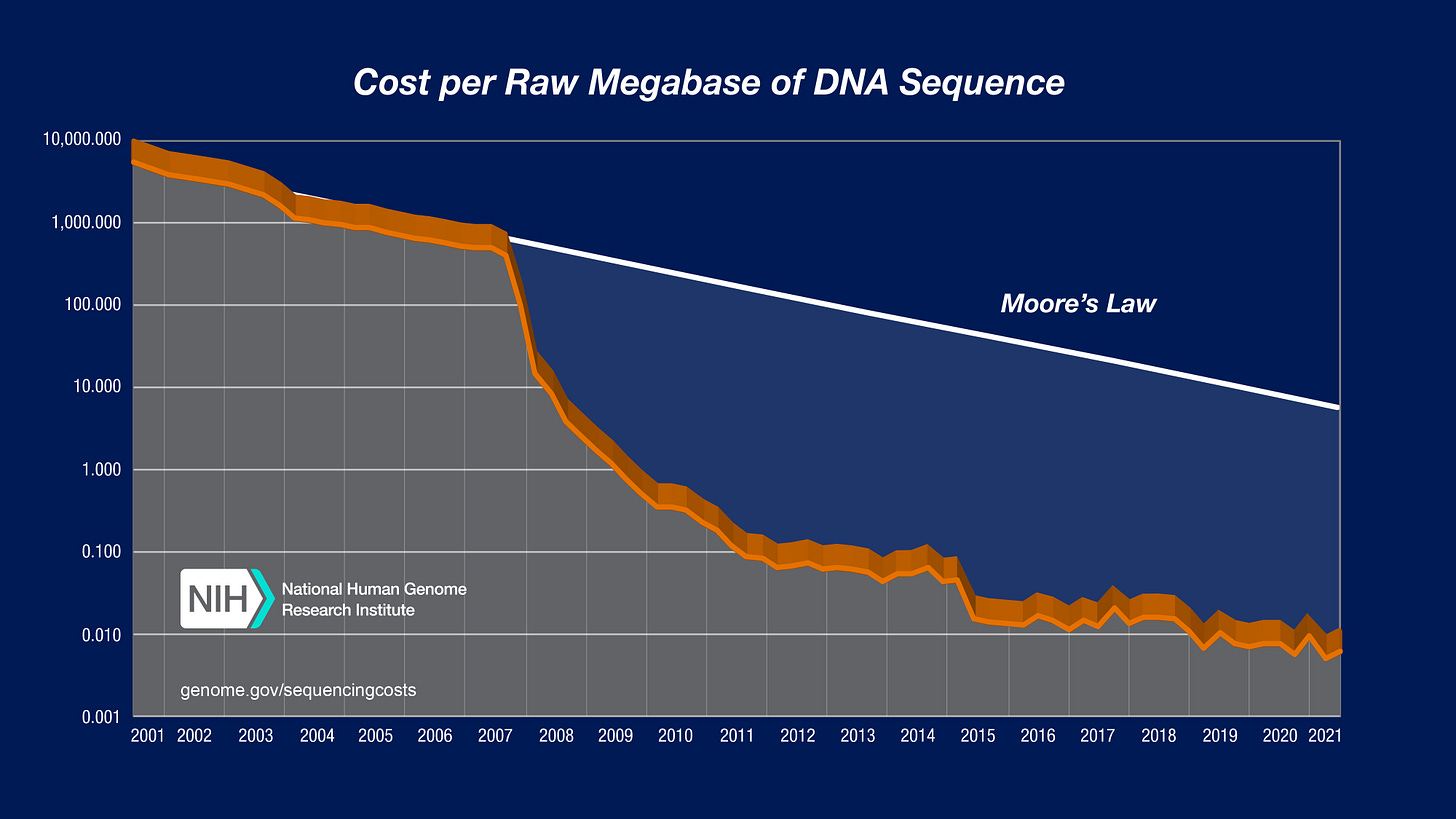

You’ve probably seen the famous NIH sequencing chart, which plots the cost per Mb of sequencing (1000 Mb equals 1 Gb). However, this chart is somewhat confusing—the curve clearly appears piecewise linear, with steady Moore’s-law-esque progress from 2001 to 2007, then a period of rapid cost decline from mid-2007 to around 2010, followed by a seeming reversion to the earlier rate of decline.

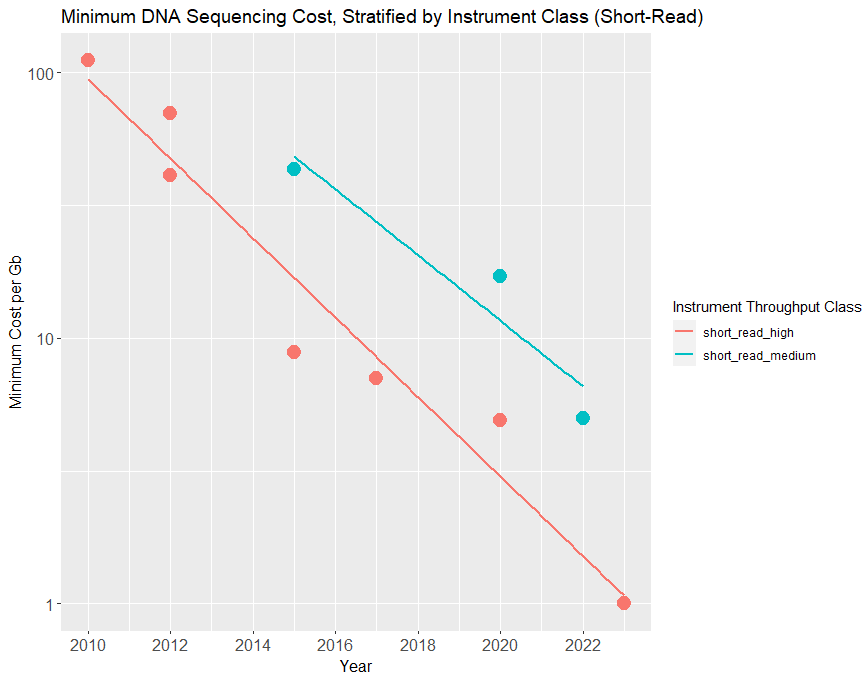

For the purposes of extrapolation, the current era of sequencing progress started around 2010 (when Illumina released the first in its line of HiSeq sequencers, the HiSeq2000). When we plot sequencing prices from then onward, restricting ourselves to short-read methods, we get the following plot.

Over the past 12 years, the price per gigabase on high-volume, short-read sequencers has declined by almost two orders of magnitude, halving roughly every 2 years—slightly slower than Moore’s law.

The first order of magnitude cost decline came in the 2010-2015 period with Illumina’s HiSeq line, the price of sequencing plummeting from ~$100/Gb to ~$10/Gb; this was followed by 5 years of relative stagnation, for reasons unknown; and in the past 2 years, there’s been another order of magnitude drop, with multiple competitors finally surpassing Illumina and approaching $1/Gb prices. The sequencing market is starting to really heat up, and that likely means the biennial doubling trend will hold; if it does, and if current prices are to be believed, then we can expect sequencing prices to hit $0.1/Gb around 2028-2029.

To make this trend more intuitive, we can explain it in terms of the cost of sequencing a human genome.

A haploid human genome (i.e., one of the two sets of 23 chromosomes the typical human has) is roughly 3 Gb (bases, not bytes) on average (e.g., the X chromosome is much larger than the Y chromosome, so a male’s two haploid genomes will differ in size). Therefore, sequencing this haploid genome at 30x coverage—meaning each nucleotide is part of (i.e., covered by) 30 unique reads on average—which is the standard for whole genome sequencing, results in ~90 Gb of data (call it 100 Gb to make the numbers round). So, we can use this 100 Gb human genome figure as a useful unit of measurement for sequencing prices.

In 2010 to 2011, a human genome cost somewhere in the $5,000 to $50,000 range; by 2015, the price had fallen to around $1000; and now, in 2022, it is allegedly nearing $100 (though this was already being claimed two years ago).

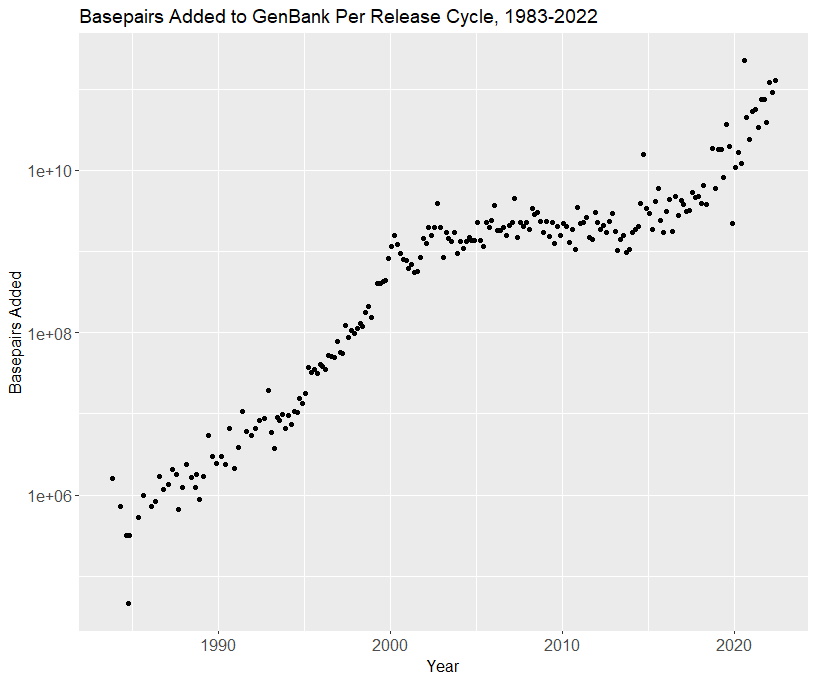

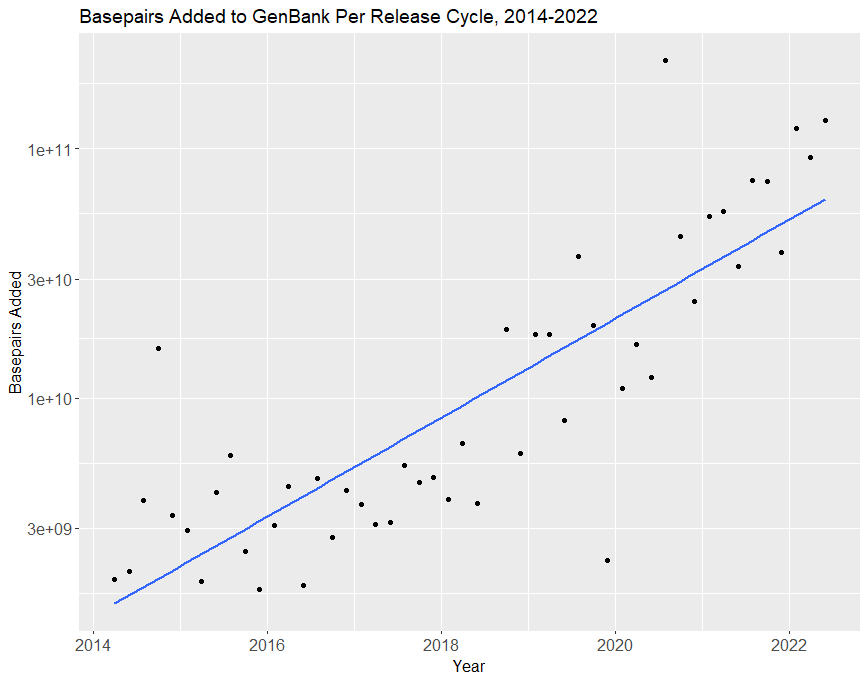

This exponential decline in price has led to a corresponding exponential increase in genome sequencing data. Since around 2014, the number of DNA bases added per release cycle to GenBank, the repository of all publically available DNA sequences, has doubled roughly every 18 months.

But as noted earlier, sequencing has many uses other than genome sequencing. Arguably, the revolution in reading non-genomic biological state has been the most important consequence of declining sequencing costs.

The Single-Cell Omics Revolution

Biological systems run off of nucleic acids and proteins, among other macromolecules. And because proteins are translated from RNA, all biological complexity ultimately traces back to the transformations of nucleic acids—epigenetic modification of DNA, transcription of DNA to RNA, splicing of RNA, etc. Sequencing-based scopes allow us to interrogate these nucleic acid-based processes.

We can divide the study of these processes into two areas: transcriptomics, the study of RNA transcripts, which are transcribed from DNA; and epigenomics, the study of modifications made to genetic material above the level of the DNA sequence, which can alter transcription.

Transcriptomics and epigenomics have been studied for decades. However, the past decade was an incredibly fertile period for these subjects due to the combination of declining sequencing costs and advances in methods for preparing biological samples for sequencing-based readout.

The defining feature of these sample preparation methods has been their biological unit of analysis: the single cell.

It’s not inaccurate to call the past decade of computational biology the decade of single-cell methods. The ability to read epigenomic and transcriptomic state at single-cell resolution has revolutionized the study of biological systems and is the source of much current biomedical optimism.

Applications of Single-Cell Omics

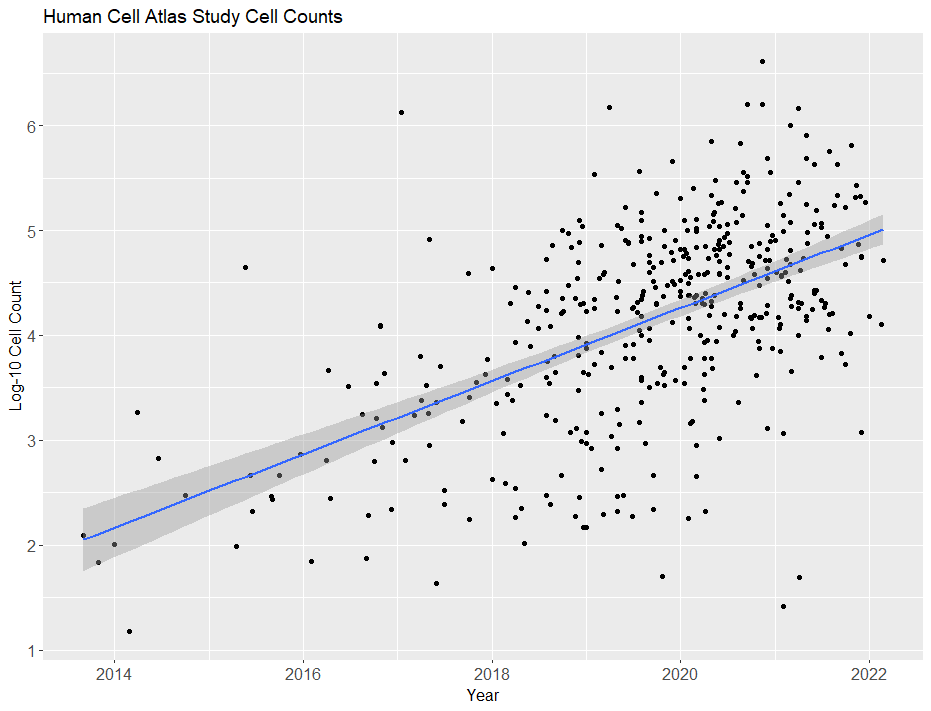

To understand how much single-cell methods have taken off, consider the following chart:

This is a plot of the number of cells in each study added to the Human Cell Atlas, which aims to “create comprehensive reference maps of all human cells—the fundamental units of life—as a basis for both understanding human health and diagnosing, monitoring, and treating disease.” The number of cells per study has been increasing by an order of magnitude a little under every 3 years—and the frequency with which studies are added is increasing too.

The HCA explains their immense ambitions like so:

Cells are the basic units of life, but we still do not know all the cells of the human body. Without maps of different cell types, their molecular characteristics and where they are located in the body, we cannot describe all their functions and understand the networks that direct their activities.

The Human Cell Atlas is an international collaborative consortium that charts the cell types in the healthy body, across time from development to adulthood, and eventually to old age. This enormous undertaking, larger even than the Human Genome Project, will transform our understanding of the 37.2 trillion cells in the human body.

The way these human cells are charted is by reading out their internal state via single-cell methods. That is, human tissues (usually post mortem, though for blood and other tissues this isn’t always the case) are dissociated into individual cells, and then these cells’ contents are assayed (i.e., profiled, or read out) along one or more dimensions of transcriptomic or epigenomic state.

Crucially, these assays rely on sequencing for readout. In the case of single-cell RNA sequencing, the RNA transcripts inside the cells are reverse transcribed into complementary DNA sequences, which are then read out by sequencers. But sequencing can be used to read out other types of single-cell state that aren’t natively expressed in RNA or DNA—chromatin conformation, chromatin accessibility, and other epigenomic modifications—which typically requires a slightly more convoluted library preparation.

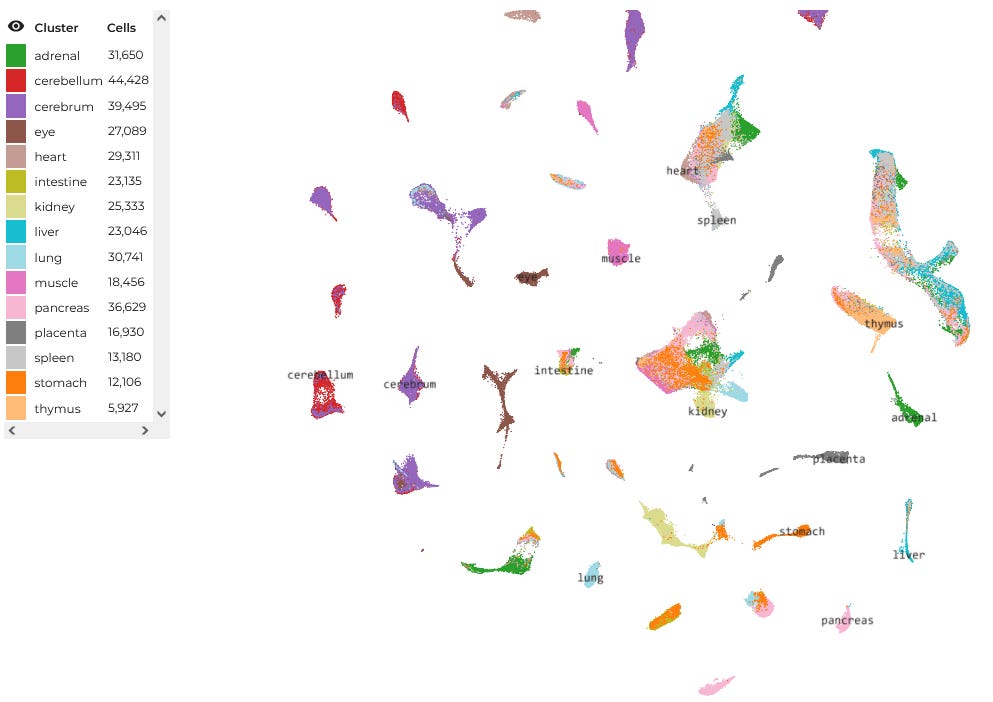

The upshot is that these omics profiles, as they are called, act as proxies for the cells’ unique functional identities. Therefore, by assaying enough cells, one can develop a “map” of single-cell function, which can be used to understand the behavior of biological systems with incredible precision. Whereas earlier bulk assays lost functionally consequential inter-cellular heterogeneity in the tissue-average multicellular soup, now this heterogeneity can be resolved in its complete, single-cell glory. These single-cell maps look like so (this one is of a very large human fetal cell atlas, which you can explore here):

And the Human Cell Atlas is the tip of the iceberg—single-cell omics methods have taken the entire computational biology field by storm. These methods have found numerous applications: comparing cells from healthy and diseased patients; tracking the differentiation of a particular cell type to determine the molecular drivers, which might go awry in disease; and comparing single-cell state under different perturbations, like drugs or genetic modifications. Open up any major biomedical journal and you’re bound to see an article with single-cell omics data.

But this rapid expansion of single-cell data is only made possible by continual advances in methods for isolating and assaying single cells.

Single-Cell Omic Technologies

Over the past decade or so, single-cell sample preparation methods have advanced along two axes: throughput (as measured by cost and speed) and feature-richness (as measured by how many omics profiles can be assayed at once per cell and the resolution of these assays).

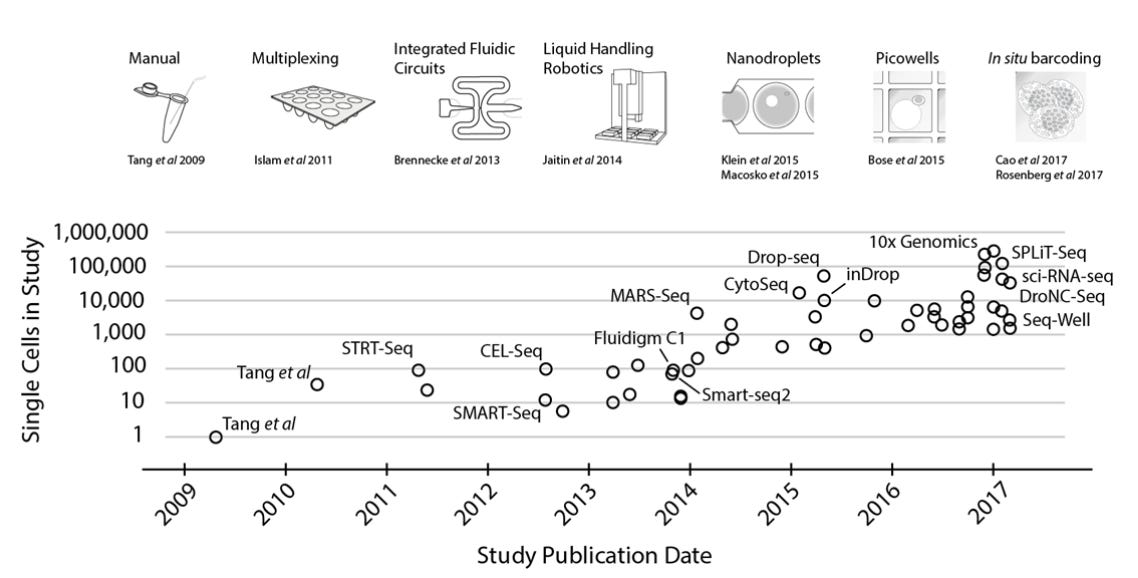

Svensson et al. explain the exponential increase in single-cell transcriptomic throughput over the past decade as the result of multiple technical breakthroughs in isolating and processing single cells at scale:

A jump to ~100 cells was enabled by sample multiplexing, and then a jump to ~1,000 cells was achieved by large-scale studies using integrated fluidic circuits, followed by a jump to several thousands of cells with liquid-handling robotics. Further orders-of-magnitude increases bringing the number of cells assayed into the tens of thousands were enabled by random capture technologies using nanodroplets and picowell technologies. Recent studies have used in situ barcoding to inexpensively reach the next order of magnitude of hundreds of thousands of cells.

That is, once a tissue has been dissociated into single cells, the challenge then becomes organizational: how do you isolate, process, and track the contents of these cells? In the case of transcriptomics, at some point the contents of the cell must undergo multiple steps of library preparation to transform RNA transcripts into DNA, and then these DNA fragments must be exposed to the sequencer for readout—all without losing track of which transcript came from which cell.

As can be seen in the graph, throughput began to really take off around 2013. But these methods have continued to advance since the above graph was published.

For instance, combinatorial indexing methods went from profiling 50,000 nematode cells at once in 2017, to profiling two million mouse cells at once in 2019, to profiling (the gene expression and chromatin accessibility of) four million human cells at once in 2020. With these increases in scale have come corresponding decreases in price per cell—even in the past year, sci-RNA-seq3, the most recent in this line of combinatorial indexing methods, was further optimized, making it ~4x less expensive than before, nearing costs of $0.003 per cell at scale.

Costs have also declined among commercial single-cell preparation methods. 10X Genomics, the leading droplet-based single-cell sample preparation company, offers single-cell RNA sequencing library preparation at a cost of roughly $0.5 per cell. But Parse Biosciences, which, like sci-RNA-seq3, uses combinatorial indexing, recently claimed its system can sequence up to a million cells at once for only $0.09 per cell. (Though one can approach these library preparation prices on a 10x chip by craftily multiplexing and deconvoluting—that is, by labeling cells from different samples, multiple cells can be loaded into the same droplet, and the droplet readout can be demultiplexed (i.e., algorithmically disentangled) after the fact, thereby dropping the cost per cell.)

Thus, like with sequencing, there’s an ongoing gold-rush in single-cell sample preparation methods, and commercial prices should only decline further—especially given that the costs of academic methods are already an order of magnitude lower.

The second dimension along which single-cell methods have progressed in the richness of their readout.

In the past few years, we’ve seen an efflorescence of so-called “multi-omic” methods, which simultaneously profile multiple omics modalities in a single-cell. For instance, one could assay gene expression along with an epigenomic modality, like chromatin accessibility, and perhaps even profile cellular surface proteins at the same time. The benefit of multi-omics is that different modalities share complementary info; often this complementary information aids in mechanistically interrogating the dynamics underlying some process—e.g., one can investigate if increases in chromatin accessibility near particular genes precede upregulation of those genes.

Yet not only can we now profile more modalities simultaneously, but we can do so at higher resolution. To give a particularly incredible example, the maximum resolution of (non-single-cell) sequencing-based chromatin conformation assays went from 1 Mb in 2009, to ~5 kb in 2020, to 20 base pairs in 2022—an increase in resolution of over four orders of magnitude. Since we’re nearing the limits of resolution, future advances will likely come from sparse input methods, like those that profile single cells, and increased throughput.

Thus, improvements in sequencing and single-cell sample preparation methods have revolutionized our ability to read out state from biological systems.

But as great as single-cell methods are, their core limitation is non-existent spatio-temporal resolution. That is, because these methods require destroying the sample, we get only a snapshot of cellular state, not a continuous movie—the best we can do is reconstructing pseudo-temporal trajectories after the fact based on some metric of cell similarity (which is perhaps one of the most influential ideas in the past decade of computational biology), though some methods are attempting to address this temporal limitation. And because we dissociate the tissue before single-cell sample preparation, all spatial information is lost.

However, this latter constraint is addressed by a different set of scopes: spatial profiling methods.

Spatial Profiling Techniques

If single-cell omics methods defined the 2010’s, then spatial profiling methods might define the early 2020’s. These methods readout nucleic acids along with their spatial locations. This information is valuable for obvious reasons—cells don’t live in a vacuum, and spatial organization plays a large role in multicellular interaction.

We’ll briefly highlight two major categories of these methods: sequencing-based spatial transcriptomics, which resolve spatial location via sequencing, and fluorescence in situ hybridization (FISH) approaches, which resolve spatial location via microscopy.

Sequencing-Based Spatial Transcriptomics

The premise of sequencing-based spatial transcriptomics is simple: rather than randomly dissociating a tissue before single-cell sequencing, thereby losing all spatial information, RNA transcripts can be tagged with a “barcode” based on their location, which can later be read out via sequencing alongside the transcriptome, allowing for spatial reconstruction of the locations of the transcripts.

These barcodes are applied by placing a slice of tissue on an array covered with spots that have probes attached to them. When the tissue is fixed and permeabilized, these probes capture the transcripts in the cells above them; then, complementary DNA sequences are synthesized from these captured transcripts, with specific spatial barcodes attached depending on the location of the spot. When these DNA fragments are sequenced, the barcodes are read out with the transcripts and used to resolve their spatial positions.

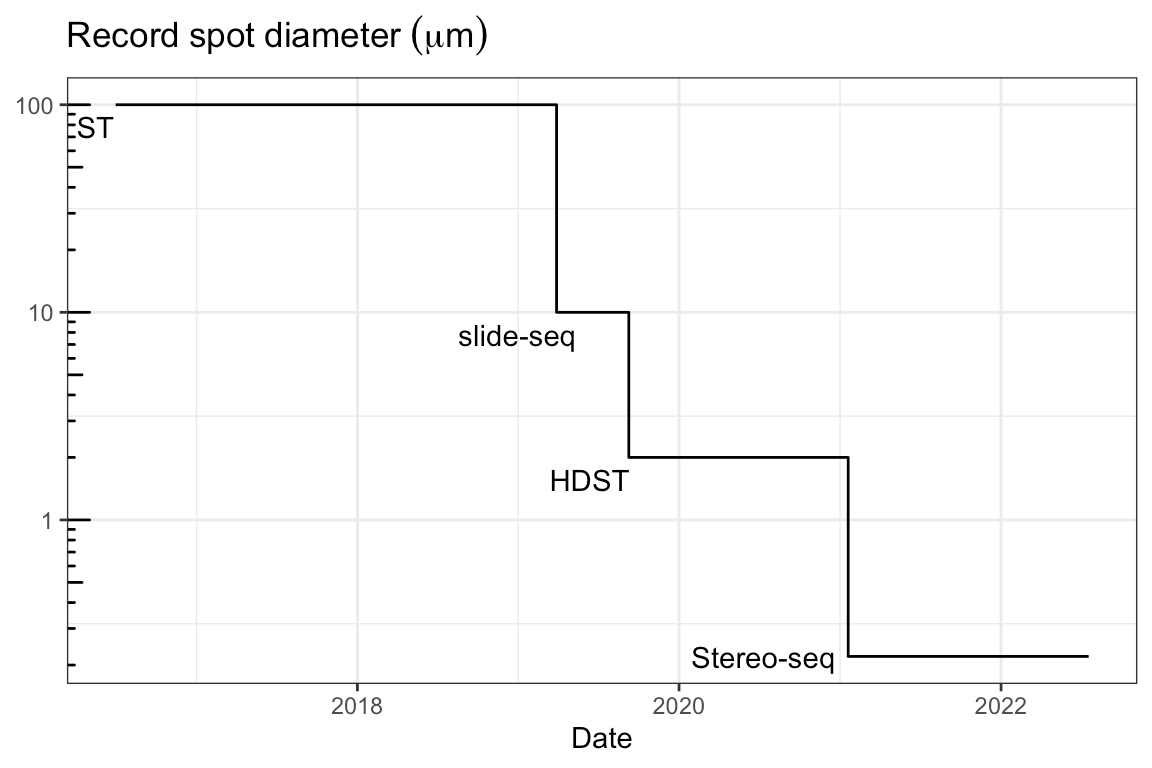

One major dimension of advance of these methods is spatial resolution, as measured by the size of the spots which RNA transcripts bind to, which determines how many cells are mapped to a single spot, and therefore the resolution with which gene expression can be resolved. Over just the past 3 years, maximum resolution has jumped by almost three orders of magnitude (image source):

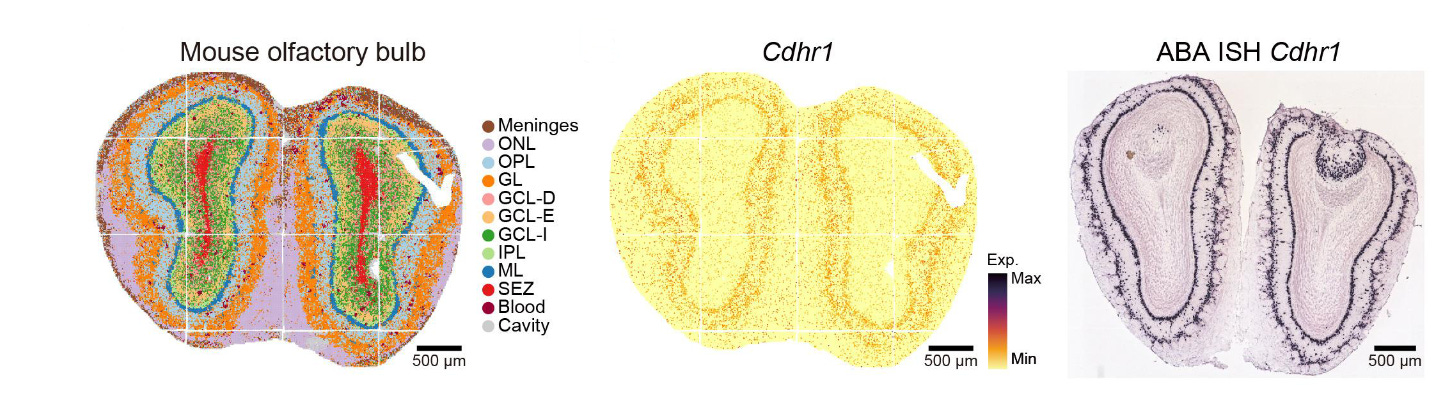

These spatial transcriptomics methods produce stunning images. For instance, here’s a section of the developing mouse brain as profiled by the currently highest resolution method, Stereo-seq:

Each dot in the middle pane represents the intensity of the measured gene at that location, but plots of this sort can be generated for all the tens of thousands of genes assayed via RNA sequencing. In the left pane, these complete gene expression profiles are used to assign each dot to its predicted average cell-type cluster, as one might do with non-spatial single-cell transcriptomics.

Yet note the resolution in the rightmost pane of the above figure—it is even greater than that of the middle pane. This image uses in situ hybridization, the basis of the spatial profiling technique we’ll explore next.

smFISH

Fluorescent in situ hybridization (FISH) methods trade off feature-richness for increased spatial resolution. That is, FISH-based methods don’t assay the RNA of every single protein-coding gene like sequencing-based methods do, but in return they localize the transcripts they do assay better.

Instead of using sequencing for readout, these methods use the other near-universal solvent or sensor of the scopes class: microscopy.

That is, whereas spatial transcriptomics resolve the location of transcripts indirectly via sequencing barcodes, FISH methods visually resolve location via microscopy. They do this by repeatedly hybridizing (i.e., binding) complementary DNA probes to targeted nucleic acids in the tissue (attached to these probes are fluorophores which emit different colors of light); after multiple rounds of hybridization and fluorescent emission from these probes, the resulting multi-color image can be deconvolved, the “optical barcodes” used to localize hundreds of genes at extremely high spatial resolution.

Unlike spatial transcriptomics, which is a hypothesis-free method that doesn’t (purposefully) select for particular transcripts, in FISH the genes to probe must be selected in advance, and typically they number in the tens or hundreds, not the tens of thousands like with spatial transcriptomics (though in principle they can reach these numbers—see below). The number of genes that can be resolved per sample (i.e., multiplexed) is limited by the size and error-rates of the fluorescent color palette that is used to mark them, and the spatial resolution with which these transcripts are localized is limited by the sensor’s ability to distinguish these increasingly crowded fluorescent signals (perhaps so crowded that the distance between them falls beneath the diffraction limit of the sensor).

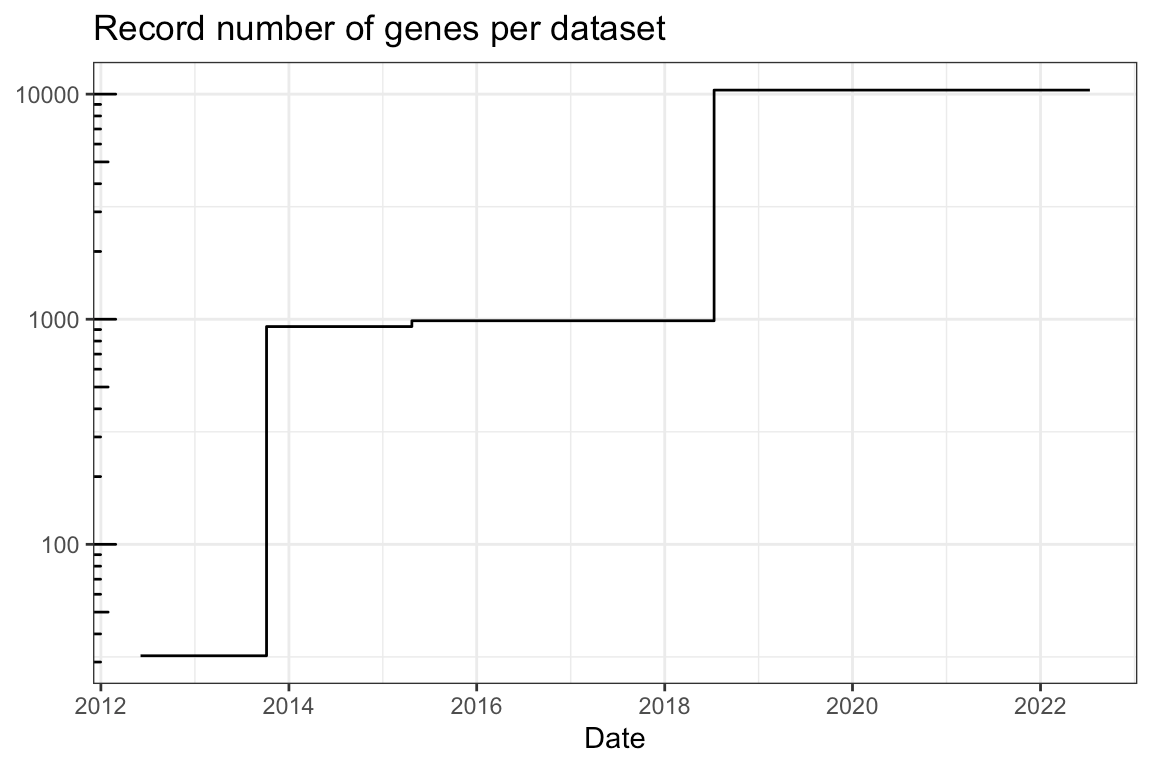

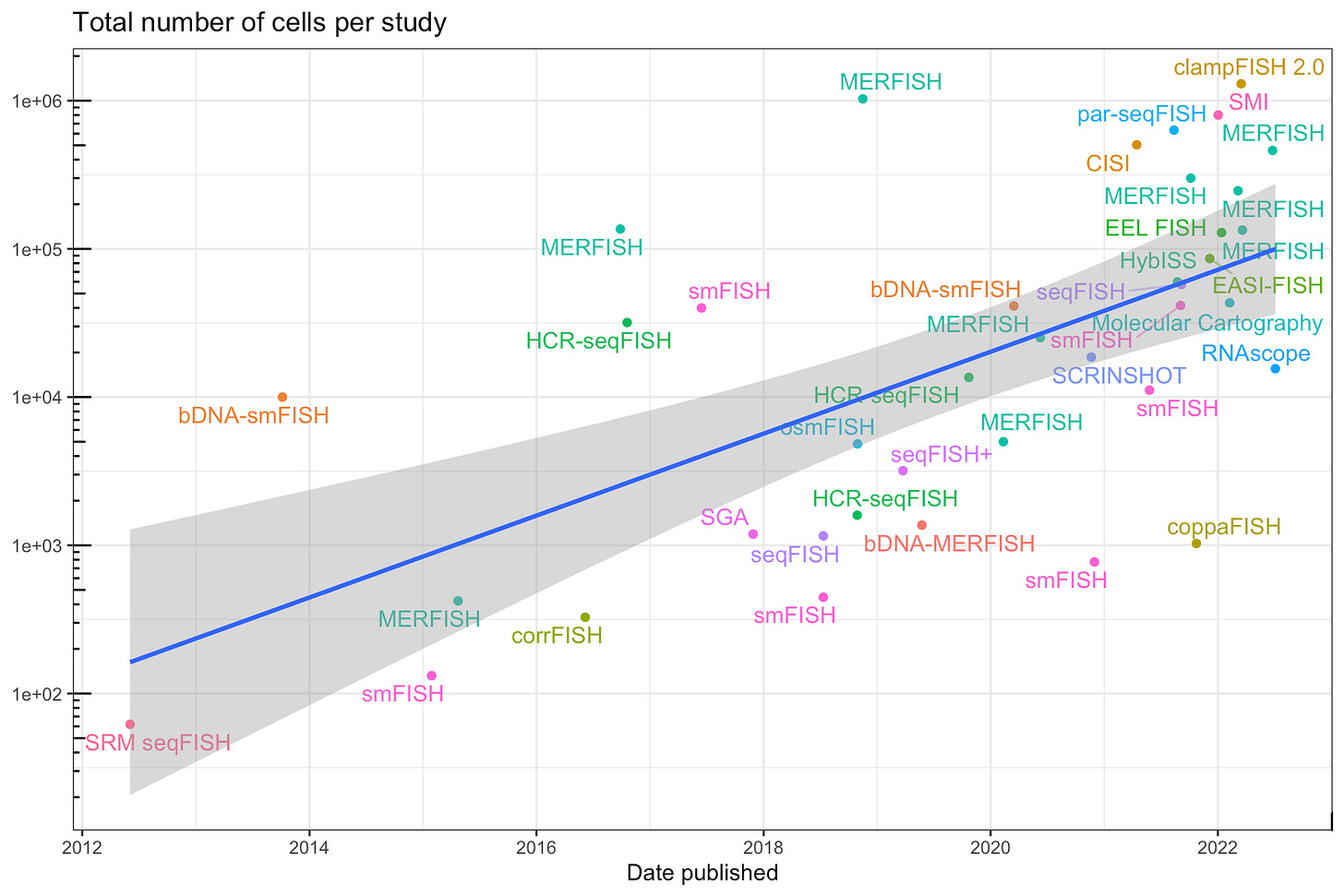

The general idea of FISH has been around for over 50 years, but the current generation of multi-gene single-molecule FISH (smFISH) methods only began to take off around 15 years ago. Since then, there’s been a fair deal of progress in gene multiplexing and the number of cells that can be profiled at once (images source):

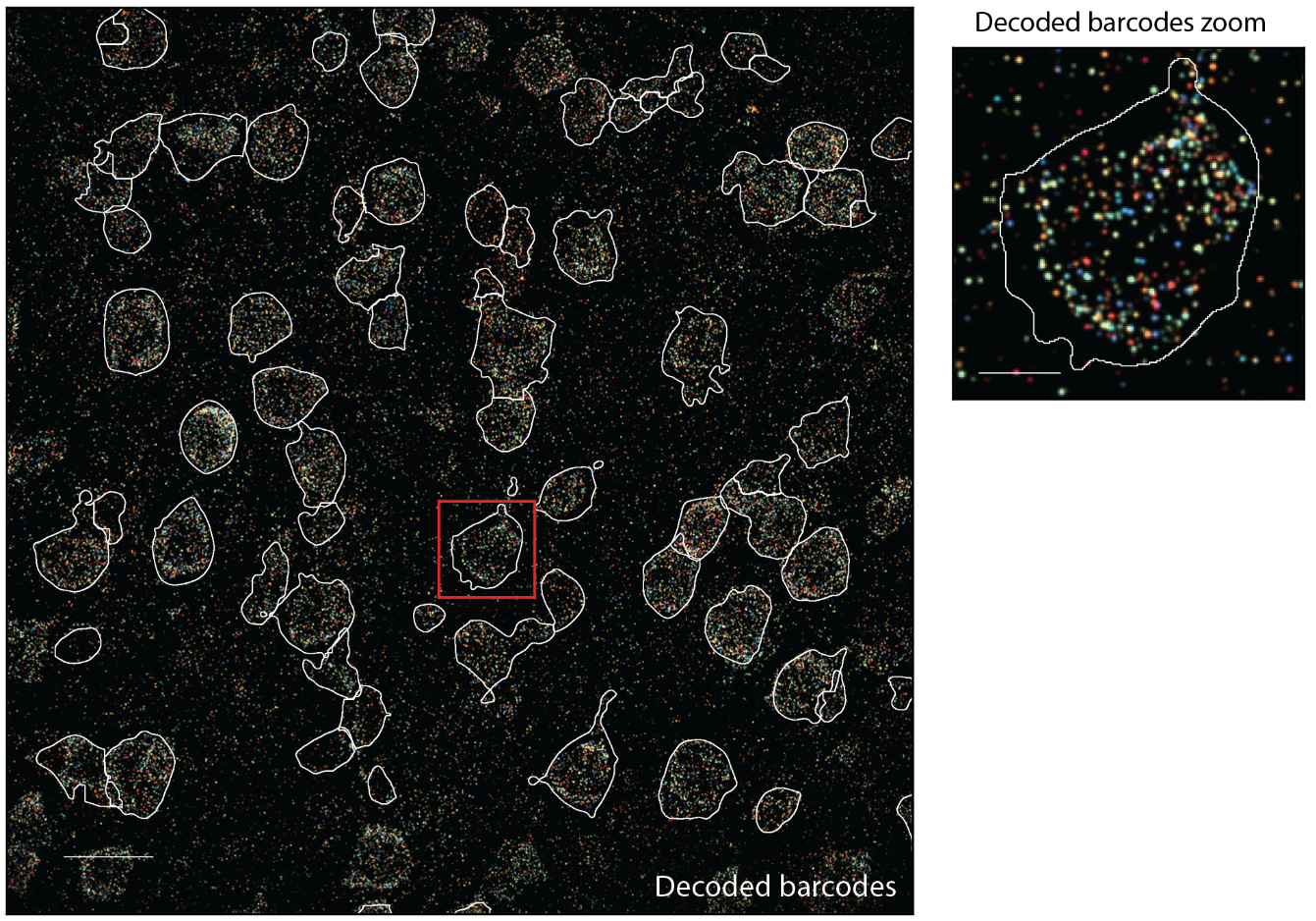

But the best way to understand these advances is visually. For instance, we can look at MERFISH, a technique which has been commercialized, part of a growing market for FISH-based spatial profiling methods. Here’s what part of a coronal slice of an adult mouse brain, with more than 200 genes visualized, looks like (the scale-bar is 20 microns in the left pane and 5 microns in the right pane):

The amount of data these methods generate is immense:

As a point of reference, the raw images from a single run of MERFISH for a ~1 cm^2 tissue sample contain about 1 TB of data.

But like spatial transcriptomics, these smFISH methods have one major drawback: though they can spatially resolve extremely feature-rich signals, they lack temporal resolution—that is, they image dead, static tissues, a problem addressed by longitudinal methods like light-sheet microscopy.

Light-Sheet Microscopy

Biological systems operate not only across space, but across time.

Methods like light-sheet fluorescence microscopy (LSFM) trade off feature-richness in exchange for this temporal resolution, all while maintaining high spatial extent and resolution. The niche filled by LSFM is explained as follows:

Fluorescence microscopy in concert with genetically encoded reporters is a powerful tool for biological imaging over space and time. Classical approaches have taken us so far and continue to be useful, but the pursuit of new biological insights often requires higher spatiotemporal resolution in ever-larger, intact samples and, crucially, with a gentle touch, such that biological processes continue unhindered. LSFM is making strides in each of these areas and is so named to reflect the mode of illumination; a sheet of light illuminates planes in the sample sequentially to deliver volumetric imaging. LSFM was developed as a response to inadequate four-dimensional (4D; x, y, z and t) microscopic imaging strategies in developmental and cell biology, which overexpose the sample and poorly temporally resolve its processes. It is LSFM’s fundamental combination of optical sectioning and parallelization that allows long-term biological studies with minimal phototoxicity and rapid acquisition.

That is, LSFM gives us the ability to longitudinally profile large 3D living, intact specimens at high spatial and temporal resolution. Thus, it plays an important complementary role to moderately feature-rich spatial transcriptomic methods and extremely feature-rich single-cell methods, both of which lack temporal resolution.

Needless to say, the technology underlying LSFM has advanced quite a lot over the past two decades. The beautiful thing about spatio-temporally resolved methods is that we can easily witness these advances with our eyes. For instance, we can compare the state of the art in imaging fly embryogenesis in 2004 vs. in 2016:

Yet in just the past year, we have seen further advances still. A new, simplified system has improved imaging speed while maintaining sub-micron lateral and sub-two-micron axial resolution—and on large specimens, no less.

And recently an LSFM method was developed that can image tissues at subcellular resolution in situ, without the need for exogenous fluorescent dyes—in effect, a kind of in vivo 3D histology. The applications of this technology, to both research and diagnostics, are numerous.

Here’s the stitching together of a 3D-resolved (but not temporally resolved) slice of in situ mouse kidney:

And here’s real-time imaging of a fluorescent tracer dye perfusing mouse kidney tubules in situ:

Scopes Redux

The scopes Pareto frontier has advanced tremendously over the past two decades, and on many dimensions at a surprisingly regular rate. This is perhaps the most exciting development in all of biomedical research.

However, the ability to read state from biological systems doesn’t alone much improve our understanding of them—for that, we must perturb them.

Scalpels

Scalpels are used to experimentally perturb biological systems. The dimensions of the scalpels Pareto frontier are similar to those of the scopes Pareto frontier:

feature precision

spatio-temporal precision

spatio-temporal extent

throughput

However, the past decade has been one dominated by advances in scopes, not scalpels. One metric of this dominance is the annual Nature method of the year, which is a good gauge for what tools are becoming popular among biological researchers. Among the past 15 winners, two are scalpels (optogenetics and genome editing), two are related to simulacra (iPSC and organoids), and the rest are scopes (NGS, super-resolution fluorescence microscopy, targeted proteomics, single-cell sequencing, LSFM, cryo-EM, sequencing-based epitranscriptomics, imaging of freely behaving animals, single-cell multiomics, and spatial transcriptomics) or analytical methods (protein structure prediction).

Scalpels have simply experienced far narrower progress over the past decade or so than scopes. Advances occurred mostly along the feature-precision and throughput dimensions within a single suite of tools, which we will restrict our attention to (and therefore this section will be comparatively short).

Genome and Epigenome Editing

The most notable advance in scalpels has been in our ability to perturb the genome and epigenome with high precision, at scale. Of course, we’re referring to the technology of CRISPR, which researchers successfully appropriated from bacteria a decade ago (though there’s some disagreement about whom the credit should go to).

When one thinks of CRISPR, they likely think about making DNA double-strand breaks (DSB) in order to knock out (i.e., inhibit the function of) whole genes. But CRISPR is a general tool for using guide RNAs to direct nucleases (enzymes which cut DNA or RNA, like the famous Cas9) to specific regions of the genome—in effect, a kind of nuclease genomic homing guidance system. By varying the nuclease one uses and the molecules attached to them, a variety of functions other than knockouts can be performed: targeted editing of DNA without DSB, inhibiting gene expression without DSB, activating gene expression, editing epigenetic marks, and RNA editing and interference.

CRISPR is not the first system to perform most of these functions, and it certainly isn’t perfect—transfection rates are still low and off-target effects still common—but that’s beside the point: the defining features of CRISPR are its generality and ease of use. If sequencing and microscopy are the universal sensors of the scopes tool class, then CRISPR might be the universal genomic actuator of the scalpels tool class.

In conjunction, these scopes and scalpels have enabled interrogating the genome at unprecedented resolution and throughput.

Using Scopes and Scalpels to Interrogate the Genome and Beyond

By perturbing the genome and observing how the state of a biological system shifts, we can infer the mechanistic structure underlying that biological system. Advances in scopes and scalpels have made it possible to do this at massive scale.

For instance, one could use CRISPR to systematically knockout every single gene in the genome across a set of samples (perhaps with multiple knockouts per sample), a so-called genome-wide knockout screen. But whereas previously the readout of these screens was limited to simple phenotypes like fitness (that is, does inhibiting a particular gene produce lethality, which can be inferred by counting the guide RNAs in the surviving samples) or a predefined set of gene expression markers, due to advances in scopes we can now read out the entire transcriptome from every sample.

Over the past five years, a lineage of papers has pursued this sort of (pooled) genome-wide screening with full transcriptional readout at increasing scale. In one of the original 2016 papers, only around 100 genes were knocked out across 200,000 mouse and human cells; yet in one of the most recent papers, all 10,000+ protein-coding genes are inhibited across more than 2.5 million human cells, with full transcriptional readout.

Yet the genome is composed of more than protein-coding genes, and ideally we’d like to systematically perturb non-protein-coding regions, which play an important role in the regulation of gene expression. The usefulness of such screens would be immense:

The human genome is currently believed to harbour hundreds-of-thousands to millions of enhancers—stretches of DNA that bind transcription factors (TFs) and enhance the expression of genes encoded in cis [i.e., on the same DNA strand]. Collectively, enhancers are thought to play a principal role in orchestrating the fantastically complex program of gene expression that underlies human development and homeostasis. Although most causal genetic variants for Mendelian disorders fall in protein-coding regions, the heritable component of common disease risk distributes largely to non-coding regions, and appears to be particularly enriched in enhancers that are specific to disease-relevant cell types. This observation has heightened interest in both annotating and understanding human enhancers. However, despite their clear importance to both basic and disease biology, there is a tremendous amount that we still do not understand about the repertoire of human enhancers, including where they reside, how they work, and what genes they mediate their effects through.

In 2019, the first such massive enhancer inhibition screen with transcriptional readout was accomplished, inhibiting nearly 6,000 enhancers across 250,000 cells, an important step to systematic interrogation of all gene regulatory regions.

But inhibition and knockout are blunt methods of perturbation. To truly understand the genome, we must systematically mutate it. Unfortunately, massively parallel methods for profiling the effects of fine-grain enhancer mutations don’t yet read out transcriptional state at scale, instead opting to trade off readout feature richness for screening throughput via the use of reporter gene assays. However, we’ll likely see fine-grain enhancer mutation screens with transcriptional readout in the coming years.

Yet advances in scopes enable us to interrogate the effects of not only genomic perturbations, but therapeutic chemical perturbations, too:

High-throughput chemical screens typically use coarse assays such as cell survival, limiting what can be learned about mechanisms of action, off-target effects, and heterogeneous responses. Here, we introduce “sci-Plex,” which uses “nuclear hashing” to quantify global transcriptional responses to thousands of independent perturbations at single-cell resolution. As a proof of concept, we applied sci-Plex to screen three cancer cell lines exposed to 188 compounds. In total, we profiled ~650,000 single-cell transcriptomes across ~5000 independent samples in one experiment. Our results reveal substantial intercellular heterogeneity in response to specific compounds, commonalities in response to families of compounds, and insight into differential properties within families. In particular, our results with histone deacetylase inhibitors support the view that chromatin acts as an important reservoir of acetate in cancer cells.

However, though impressive, it’s an open question whether such massive chemical screens (and all the tool progress we’ve just reviewed) will translate into biomedical progress.

2. The End (of Biomedical Stagnation) Is Nigh

Progress in experimental tools over the past two decades has been remarkable. It certainly feels like biomedical research has been completely revolutionized by these tools.

This revolution is already yielding advances in basic science. To name but a few:

Our understanding of longevity has advanced tremendously, from the relationship between mutation rates and lifespan among mammals, to the molecular basis of cellular senescence and rejuvenation (which could have huge clinical implications).

Open up any issue of Nature or Science and you’re bound to see a few amazing computational biology articles interrogating some biological mechanism with extreme rigor, likely using the newest tools.

And how can one forget Alphafold, the solution to a 50-year-old grand challenge in biology. “It will change medicine. It will change research. It will change bioengineering. It will change everything.” (Well, it isn’t necessarily a product of the tools revolution, but it is a major leap in basic science that contributes to the current mood of optimism.)

Biomedical optimism abounds for other reasons, too. Consider some of the recent clinical successes with novel therapeutic modalities:

Gene therapy (i.e., genetically edited autologous stem cell transplants) seem poised to solve terrible blood disorders like sickle cell disease and familial hypercholesterolemia.

RNA vaccines solved SARS-CoV-2, so maybe they’ll solve other infectious diseases, like HIV. Perhaps they’ll even solve pancreatic cancer. (Or how about the immuno-oncology double-whammy: combining CAR-T and RNA vaccines to treat solid tumors.)

It certainly feels like this confluence of factors—Moore’s-law-like progress in experimental tools, the ever-increasing mountain of biological knowledge they are generating, and a bevy of new therapeutic modalities that are already delivering promising clinical results—is ushering in the biomedical golden-age. Some say that “almost certainly the great stagnation is over in the biomedical sciences.”

How much credence should we give this feeling—are claims of the end of biomedical stagnation pure mood affiliation?

Premature Celebration

A good place to start would be to define what the end of biomedical stagnation might look like.

One of the necessary, but certainly not sufficient, conditions would be the normalization of what is often called personalized/precision/genomic medicine. Former NIH Director Francis Collins sketched what this world would look like:

…The impact of genetics on medicine will be even more widespread. The pharmacogenomics approach for predicting drug responsiveness will be standard practice for quite a number of disorders and drugs. New gene-based “designer drugs” will be introduced to the market for diabetes mellitus, hypertension, mental illness, and many other conditions. Improved diagnosis and treatment of cancer will likely be the most advanced of the clinical consequences of genetics, since a vast amount of molecular information already has been collected about the genetic basis of malignancy…it is likely that every tumor will have a precise molecular fingerprint determined, cataloging the genes that have gone awry, and therapy will be individually targeted to that fingerprint.

Here’s the kicker: that quote is from 2001, part of Collins’ 20-year grand forecast about how the then-recently-accomplished Human Genome Project would revolutionize the future of medicine (i.e., what was supposed to be the medicine of today). Unfortunately, his forecast didn’t fare well.

Though the 2000’s witnessed “breathtaking acceleration in genome science,” by the halfway point, things weren’t looking good. But Collins held out hope:

The consequences for clinical medicine, however, have thus far been modest. Some major advances have indeed been made…But it is fair to say that the Human Genome Project has not yet directly affected the health care of most individuals…

Genomics has had an exceptionally powerful enabling role in biomedical advances over the past decade. Only time will tell how deep and how far that power will take us. I am willing to bet that the best is yet to come.

Another ten years later, it seems his predictions still haven’t been borne out.

Precision oncology fell short of the hype. Only ~7% of US cancer patients are predicted to benefit from genome-targeted therapy. And oncology drugs approved for a genomic indication have a poor record in improving overall survival in clinical trials (good for colorectal cancer and melanoma; a coin-toss for breast cancer; and terrible for non-small cell lung cancer). What we call genome-targeted therapy is merely patient stratification based on a few markers, not the tailoring of therapies based on a “molecular fingerprint”.

There are no blockbuster “gene-based designer drugs” for the chronic diseases he mentions.

Pharmacogenomics is the exception, not the norm, in the clinic. The number of genes with pharmacogenomic interactions is now up to around 120 (though only a subset of interactions are clinically actionable). And, as shown in the precision oncology case, oftentimes the use of genetic markers has little impact on the outcomes of the majority of patients.

Thus, despite the monumental accomplishment of the Human Genome Project, and the remarkable advances in tools that followed from it, we have not yet entered the golden-age of biomedicine that Collins foretold.

(But don’t worry: though the precision medicine revolution has been slightly delayed, we can expect it to arrive by 2030.)

Biomedical Stagnation Example: Cancer

The optimist might retort: that’s cherry-picking. Even though genomics hasn’t yet had a huge medical impact, and even though Collins’ specific predictions weren’t realized, he is directionally correct: biomedical stagnation is already ending. Forget about tools—just look at all the recent clinical successes.

Take cancer, for instance. Many indicators seem positive: five-year survival rates are apparently improving, and novel therapeutic modalities like immunotherapy and cell therapy are revolutionizing the field. Hype is finally catching up with reality.

Yes, there have undoubtedly been some successes in cancer therapeutics over the past few decades—Gleevec cured (that is not an exaggeration) chronic myeloid leukemia; and checkpoint inhibitors have improved treatment of melanoma, NSCLC, and a host of other cancers.

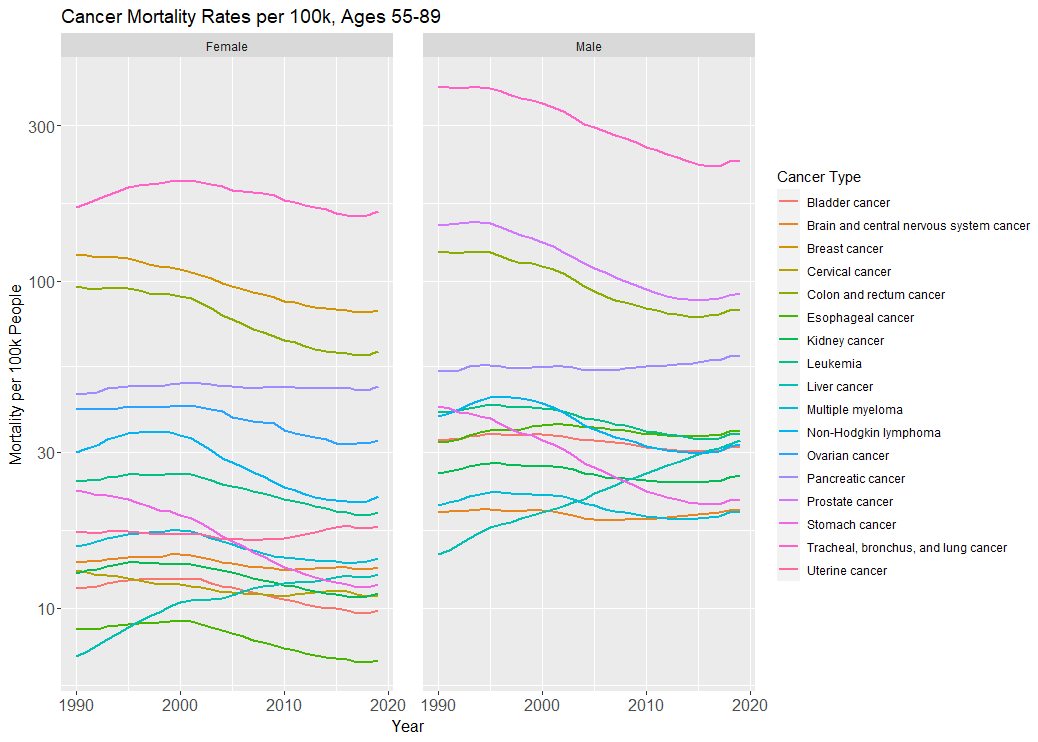

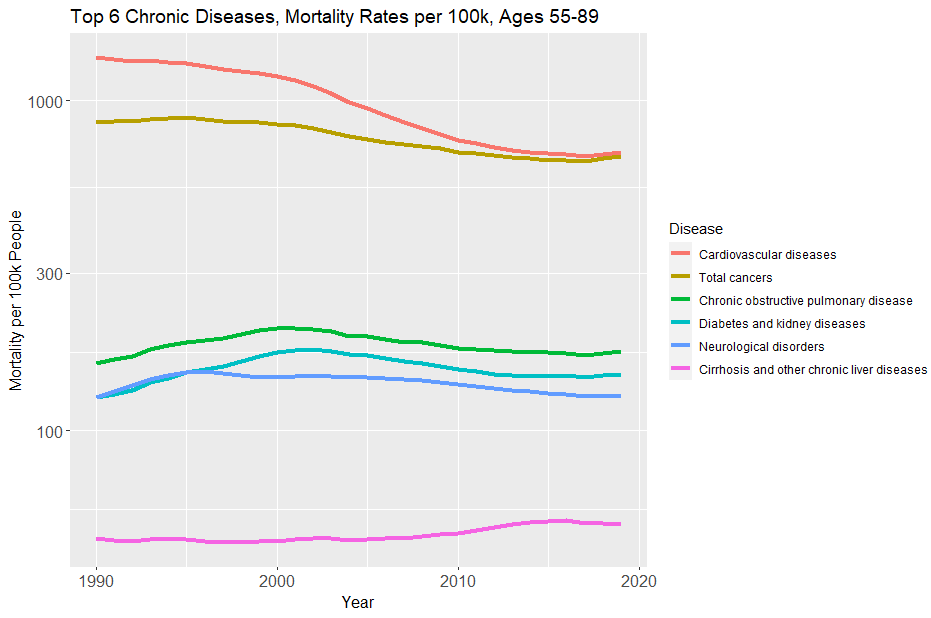

But in terms of overall (age-standardized) mortality across all cancers, the picture is mixed.

In the below graph, we see this (note the log-10-transformed y-axis):

Mortality from the biggest killer of the cancers, lung cancer, has fallen due to smoking cessation (the same goes for stomach cancer). The mortality rate for the second biggest killer among women, breast cancer, fell by ⅓ from 1990 to 2019—a good portion of this is attributable to improved therapeutics. Mortality from prostate cancer in men, and colorectal cancer in both sexes, have all also fallen around 30-40%, much of which is attributable to screening.

Yet mortality from pancreatic cancer hasn’t moved. Late-stage breast cancer is still a death sentence. The incidence (and mortality) of liver cancer has actually increased among both sexes, due to increased obesity. And plenty of the cancers we’ve lowered the incidence of through public health measures—esophageal, lung, stomach—still have incredibly low survival rates.

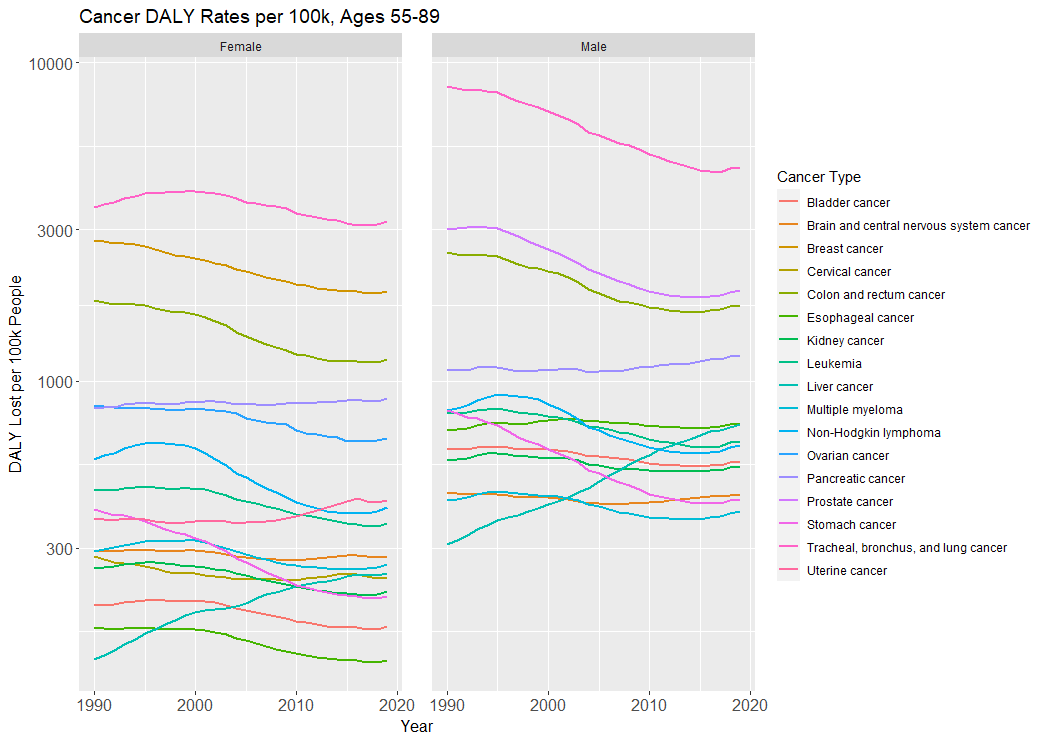

The declines in disability-adjusted life years (DALYs) lost mirror the declines in overall mortality (note again the log-scale):

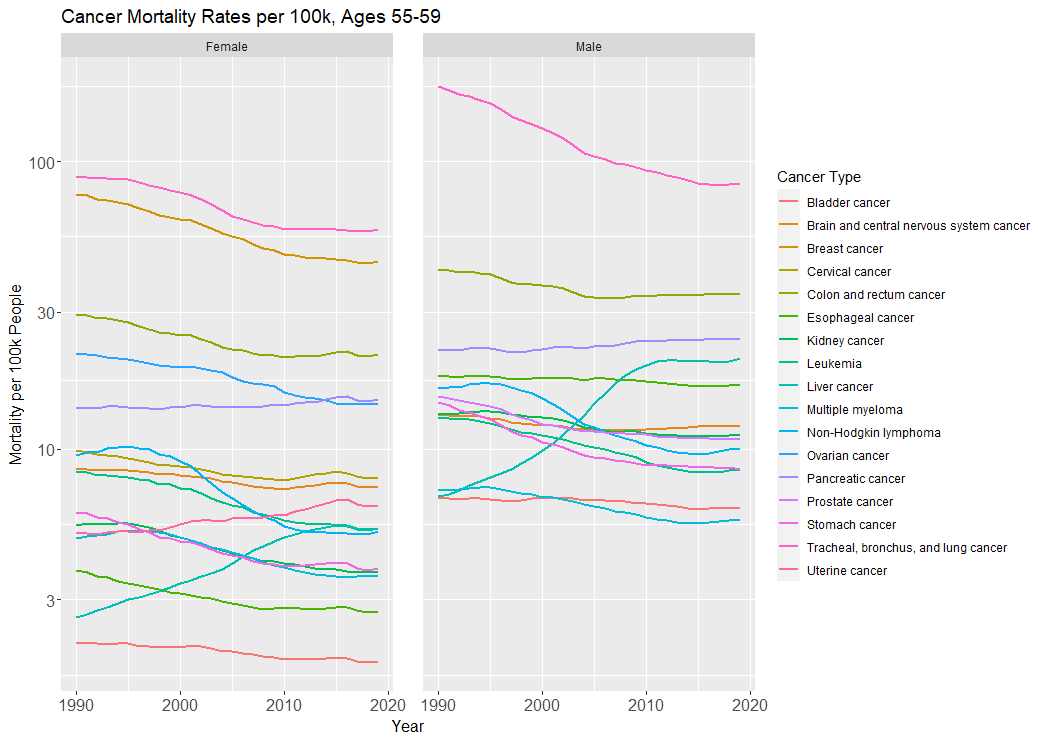

And if you’re wondering if it looks any different for adults ages 55 to 59, perhaps because of an age composition effect, it does not. The declines are all roughly the same compared to the 55 to 89 group (that is, 30-40% declines for the major cancers like breast, prostate, colorectal, ovarian, etc.).

But one needn’t appeal to mortality or DALY rates to show things are still stagnant. Just look at how shockingly primitive our cancer care still is: we routinely lop off body parts and pump people full of heavy metals or other cytotoxic agents (most of which were invented in the 20th century), the survival benefits of which are often measured in months, not years.

The optimist might push back again: yes, the needle hasn’t moved much for the toughest cancers, but novel modalities like CAR-T are already having a huge impact on hematological malignancies, and they’ll someday cure solid tumors. Clearly biomedical stagnation is in the process of ending. Give it a bit of time.

To which the skeptic replies: Yes, CAR-T has shown some great results in some specific blood cancers, and there’s a lot to be optimistic about. But let’s not get ahead of ourselves. When one critically examines the methodology of many CAR-T clinical trials, the survival and quality of life benefits aren’t as impressive as its proponents would lead you to believe (as is the case with many oncology drugs). We’re likely at least a decade away from CAR-T meaningfully altering annual mortality of any sort of solid tumor.

Thus, it seems a bit premature to say biomedical stagnation in cancer has ended, based purely off some recent promising clinical trials—especially when we’ve been repeatedly sold this story before.

The war is still being fought, 50 years on. Likewise for the other five major chronic diseases, where the picture is often even bleaker.

We wanted a cure for cancer. Instead we got genetic ancestry reports.

Real Biomedical Research Has Never Been Tried

The optimist will backpedal and grant that biomedical stagnation hasn’t yet ended for chronic diseases (infectious diseases and monogenic disorders are another question). But despite biomedical progress being repeatedly oversold and under-delivered on, and despite us putting ever-more resources into it, they think this time is different. Yes, Francis Collins said the same thing 20 years ago, but forget the reference class: this time really is different. Those were false starts; this is the real deal. The end of biomedical stagnation is imminent.

There’s a simple reason for believing this: our tools for interrogating biological systems are on exponential improvement curves, and they are already generating unprecedented insight. Due to the accretive nature of scientific knowledge, it’s only a matter of time before we completely understand these biological systems and cure the diseases that ail them.

To which the skeptic might say: but didn’t the optimists make that exact same argument 10 or 20 years ago? What’s changed? Eroom’s law hasn’t: all the putatively important upstream factors (combinatorial chemistry, DNA sequencing, high-throughput screening, etc.) continue to become better, faster, and cheaper, and our understanding of disease mechanisms and drug targets has only grown, yet the number of drugs approved per R&D dollar has halved every 9 years for the past six or seven decades—with this trend only recently plateauing (not reversing) due to a friendlier FDA, and drugs targeting rare diseases, finer disease subtypes, and genetically validated targets.

Likewise for returns per publication and clinical trial. (And, by the way, more than half of major preclinical cancer papers fail to replicate.)

To which the optimist says: we’ve been hamstrung by regulation and only recently developed the experimental tools necessary to do real biomedical research. But now that these tools have arrived, they will change the game. We might even dare to say these tools, combined with advances in software, enable a qualitatively different type of experimental biomedical research. Biomedicine will become a “data-driven discipline”:

…exponential progress in DNA-based technologies—primarily Sequencing and Synthesis in combination with molecular tools like CRISPR—are totally changing experimental biology. In order to grapple with the new Scale that is possible, Software is essential. This transition to being a data-driven discipline has served as an additional accelerant—we can now leverage the incredible progress made in the digital revolution [emphasis not added].

Just wait—now that we have the right biological research tools, the end of biomedical stagnation is imminent.

The Mechanistic Mind’s Translational Conceit

But the optimist is begging the question. They assume progress in biological research will naturally lead to progress in biomedical outcomes, but we’ve repeatedly seen this isn’t the case: our biological research has (apparently) advanced tremendously over the past twenty years, yet this hasn’t translated into similarly tremendous medical results, despite all predictions to the contrary.

Yet the mechanistic mind is confident this will change. This is the mechanistic mind’s translational conceit: that accumulating experimental knowledge and building ever-more reductionistic models of biology will eventually lead to cures for disease. Once we carve nature at the joints (i.e., discover the ground-truth mechanistic structure of biological systems, expressible in human-legible terms), the task of translation will become easy. Through understanding nature, we will learn how to control it.

And if we look back in ten years and the mortality indicators haven’t budged much, then it simply means we’re ten years closer to a cure. This is a marathon, not a sprint. Stay the course: run more experiments, collect more data, and continue carving. The diseases will yield eventually. We must leave no nucleotide unsequenced, no protein un-spectrometered…

At a Crossroads

The mechanistic mind does not have a concrete model of biomedical progress. Rather, they have unwavering faith that more biomedical research leads to more translational progress. Their optimism is indeterminate. It is why they are repeatedly disappointed when amazing research discoveries fail to translate into cures but nonetheless maintain faith that more research is the answer—they can’t tell you when the cures will arrive, but at least they know they’re pushing in the right direction.

The mechanistic mind has certainly done a lot for us—there’s no denying that. But it will not deliver on the biomedical progress it has promised in a timely fashion.

However, there’s no need for despair: this time is, in fact, different. Progress in tools has created the potential for a radically different research ethos that will end biomedical stagnation. But to understand this new research ethos, we must first understand the telos of the mechanistic mind and why it is at odds with the biomedical problem setting.

3. The Spectrum of Biomedical Research Ethoses

Let’s return to our original formulation of the biomedical problem setting:

The purpose of biomedicine is to control the state of biological systems toward salutary ends.

This definition is rather broad. It doesn’t specify how we must go about learning to control biological systems. Let’s reframe the problem to make it more tractable.

The Dynamics Reframing of Biomedicine

We can first recast this problem as a search problem: given a starting state, s0, and a desired end state, s1, the task is to find the intervention that moves the system from s0 to s1. For instance, a patient is in a diseased state, and you must find the therapeutic intervention that moves them into a healthy state.

However, the space of interventions is large and biology is complex, so brute-force search won’t work. Therefore, we can further recast this search problem as the problem of learning an action-conditional model of biology—i.e., a dynamics model, to use the language of model-based reinforcement learning—to guide this search through intervention space. A dynamics model takes in an input state (or, in the non-Markovian case, multiple past states) and an action, and predicts the resulting state, f:S×A→S′.

The predictive performance of the dynamics model directly determines the efficiency of the search through intervention space. That is, the better your model predicts the behavior of a biological system, the easier it will be to learn to control it.

Thus, the biomedical control problem reduces to a search problem over intervention space, which itself reduces to the problem of learning a dynamics model to guide this search.

Learning this dynamics model is the task of biomedical research.

However, the mechanistic mind smuggles in a set of assumptions about what form this model must take:

This ethos aims to control biological systems by building mechanistic models of them that are explainable and understandable by humans (i.e., human-legible).

By interrogating this set of assumptions, we will see why the mechanistic mind is the wrong research ethos to approach the biomedical dynamics problem from.

Telos of The Mechanistic Mind

…In that Empire, the Art of Cartography attained such Perfection that the map of a single Province occupied the entirety of a City, and the map of the Empire, the entirety of a Province. In time, those Unconscionable Maps no longer satisfied, and the Cartographers Guilds struck a Map of the Empire whose size was that of the Empire, and which coincided point for point with it. The following Generations, who were not so fond of the Study of Cartography as their Forebears had been, saw that that vast Map was Useless, and not without some Pitilessness was it, that they delivered it up to the Inclemencies of Sun and Winters. In the Deserts of the West, still today, there are Tattered Ruins of that Map, inhabited by Animals and Beggars; in all the Land there is no other Relic of the Disciplines of Geography. — Jorge Luis Borges, On Exactitude in Science

A unifying telos, invariant across phenomena of study and eras of tooling, underlies the mechanistic mind.

This telos is building a 1-to-1 map of the biological territory—reducing a biological system to a perfectly legible molecular (or perhaps even sub-molecular) diagram of the interactions of its constituent parts. This is how the mechanistic mind intends to carve nature at the joints; it will unify through dissolution.

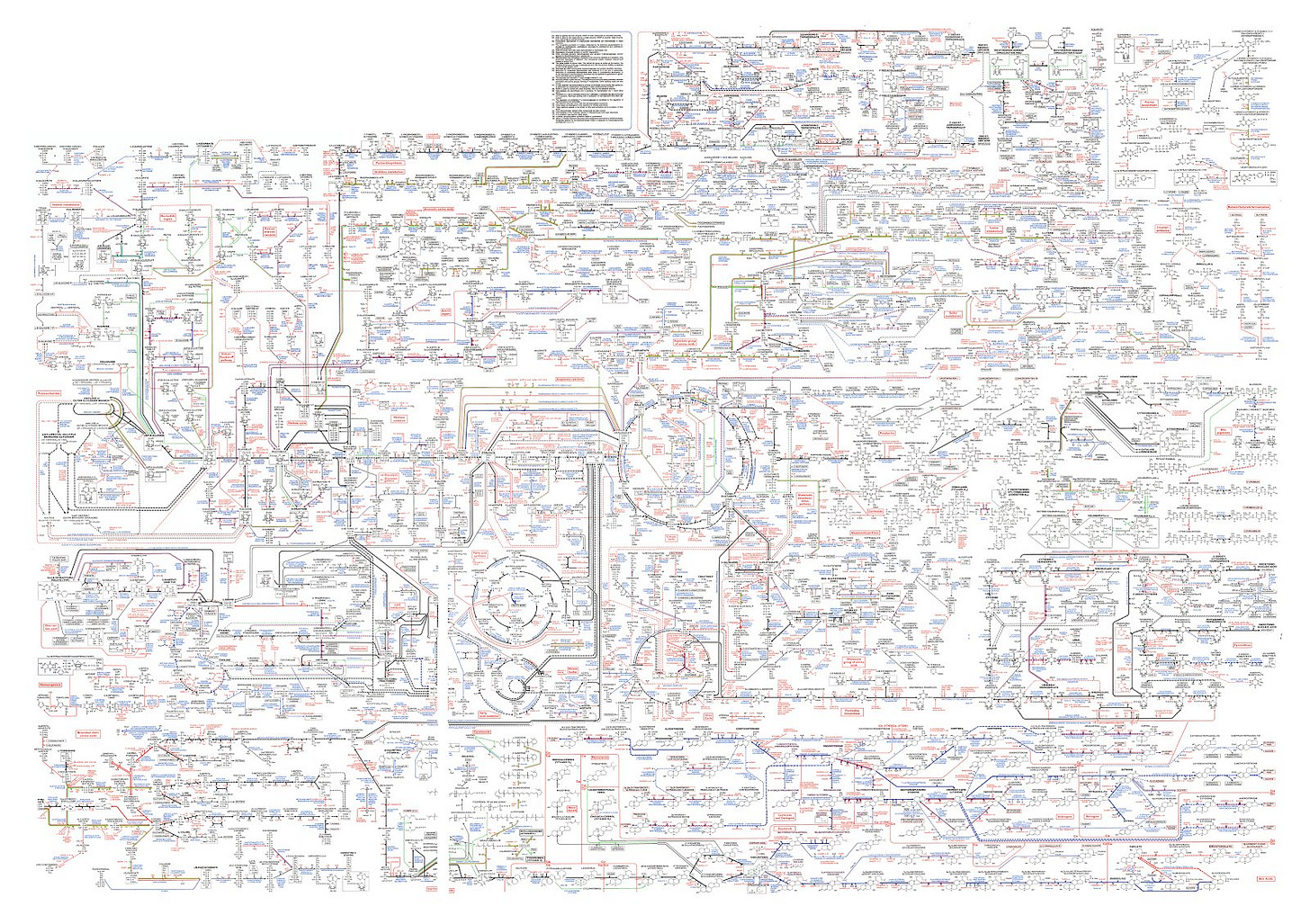

This isn’t hypothetical: a simplified version of such a map of biochemical pathways was created over 50 years ago for the major metabolic pathways and major cellular and molecular processes.

Those two maps are quite large and complex, but they are massively simplified and pre-genomic. Our current maps are far larger and more sophisticated.

These maps take the form of ontologies. For instance, Gene Ontology is “the network of biological classes describing the current best representation of the ‘universe’ of biology: the molecular functions, cellular locations, and processes gene products may carry out.”

Concretely, GO is a massive directed graph of biological classes (molecular functions, cellular components, and biological processes) and relations (“is a”, “part of”, “has part”, “regulates”) between them.

But simple ontologies are just the beginning. One can build extremely complex relational logic atop them, like qualifiers for relational annotations between genes and processes:

A gene product is associated with a GO Molecular Function term using the qualifier ‘contributes_to’ when it is a member of a complex that is defined as an “irreducible molecular machine” - where a particular Molecular Function cannot be ascribed to an individual subunit or small set of subunits of a complex. Note that the ‘contributes_to’ qualifier is specific to Molecular Functions.

But single annotations, even with qualifiers, are simply not expressive enough to encode the complexity of biology. Luckily, there’s an ontology of relations one can draw on to compose convoluted relations, which can be used to model larger, more complex biological systems:

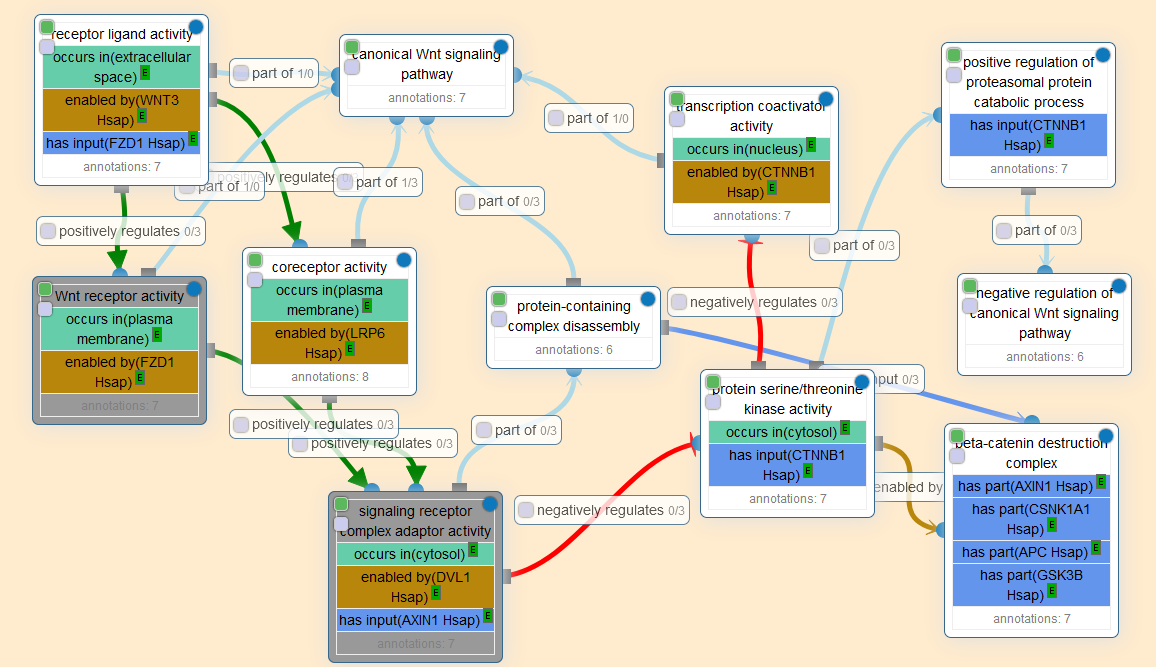

GO-Causal Activity Models (GO-CAMs) use a defined “grammar” for linking multiple standard GO annotations into larger models of biological function (such as “pathways”) in a semantically structured manner. Minimally, a GO-CAM model must connect at least two standard GO annotations (GO-CAM example).

The primary unit of biological modeling in GO-CAM is a molecular activity, e.g. protein kinase activity, of a specific gene product or complex. A molecular activity is an activity carried out at the molecular level by a gene product; this is specified by a term from the GO MF ontology. GO-CAM models are thus connections of GO MF annotations enriched by providing the appropriate context in which that function occurs. All connections in a GO-CAM model, e.g. between a gene product and activity, two activities, or an activity and additional contextual information, are made using clearly defined semantic relations from the Relations Ontology.

For instance, here’s a graph visualization of the GO-CAM for the beginning of the WNT signaling pathway:

Conceivably, one could use these causal activity models to encode all observational and experimental knowledge about biological systems, including the immense amounts of genome-wide, single-nucleotide-resolution screening data currently being generated. The potential applications are immense:

The causal networks in GO-CAM models will also enable entirely new applications, such as network-based analysis of genomic data and logical modeling of biological systems. In addition, the models may also prove useful for pathway visualization…With GO-CAM, the massive knowledge base of GO annotations collected over the past 20 years can be used as the basis not only for a genomic-biology representation of gene function but also for a more expansive systems-biology representation and its emerging applications to the interpretation of large-scale experimental data.

The benefit of this sort of model is that it is extremely legible: the ontologies and relations are crystal clear, and every annotation points to the piece of scientific evidence it is based on. It is ordered, clean, and systematic.

And, in effect, this knowledge graph is what most modern biomedical research is working toward. Even if publications aren’t literally added to an external knowledge graph, researchers use the same set of conceptual tools when designing and analyzing their experiments—relations like upregulation, sufficiency and necessity; classes like biological processes and molecular entities—the stuff of the mechanistic mind. Ontologies and causal models are merely an externalization of the collective knowledge graph implicit in publications and the heads of researchers.

And yet, what does this knowledge graph get us in terms of dynamics and control?

Suppose we were given the ground-truth, molecule-level mechanistic map of some biological system, like a cell, in the form of a directed graph. For instance, imagine this map as a massive, high-resolution gene regulatory network describing the inner-workings of the cell.

It’s not immediately clear how we’d use this exact map to control the cell’s behavior, let alone the behavior of larger systems (e.g., a tissue composed of multiple cells).

One idea is to take the network and model the molecular kinetics as a system of differential equations, and use this to simulate the cell at the molecular level. This has already been tried for a minimal bacterial cell:

We present a whole-cell fully dynamical kinetic model (WCM) of JCVI-syn3A, a minimal cell with a reduced genome of 493 genes that has retained few regulatory proteins or small RNAs… Time-dependent behaviors of concentrations and reaction fluxes from stochastic-deterministic simulations over a cell cycle reveal how the cell balances demands of its metabolism, genetic information processes, and growth, and offer insight into the principles of life for this minimal cell.

In theory, once you’ve built a spatially resolved, molecule-level simulation of the minimal cell, you then move up to a full-fledged cell, then multiple cells, and so on. Eventually you’ll arrive at a perfect simulation of any biological system, which you can do planning over.

The most significant problem you face, obviously, is computational limitations. Therefore, it seems a perfect map of the biological territory wouldn’t make for a good dynamics model.

However, intuitively, it appears that an exact map should give us the ability to predict the dynamics of the cell and, as a result, control it. Even if simulation isn’t possible, shouldn’t understanding the system at a fine-grain level necessarily give us coarser-grain maps that can be used to predict and control the system’s dynamics at a higher level?

Unfortunately, the answer is no. The issue, it turns out, is that the mechanistic mind’s demand for dynamics model legibility led the model to capture the biological system’s dynamics at the wrong level of analysis using the wrong conceptual primitives.

This is the fundamental flaw in the mechanistic mind’s translational conceit: mistaking advances in mechanistic, human-legible knowledge of smaller and smaller parts of biological systems for advances in predicting the behavior of (and, consequently, controlling) the whole biological systems those parts comprise.

But we needn’t resign ourselves to biomedical stagnation; there are alternative forms of dynamics models which are more suitable for control. Understanding them will require a brief foray into the history of machine learning.

Three Stories from the History of Machine Learning

By tracing the development of machine learning methods applied to three problem domains—language generation and understanding, game-playing agents, and autonomous vehicles—we will develop an intuition for what direction biomedical dynamics models must head in to end biomedical stagnation.

The Bitter Lesson

To make a long story short, these three AI problem domains, and countless others, have undergone a similar evolution, what reinforcement learning pioneer Richard Sutton calls the “bitter lesson” of AI research:

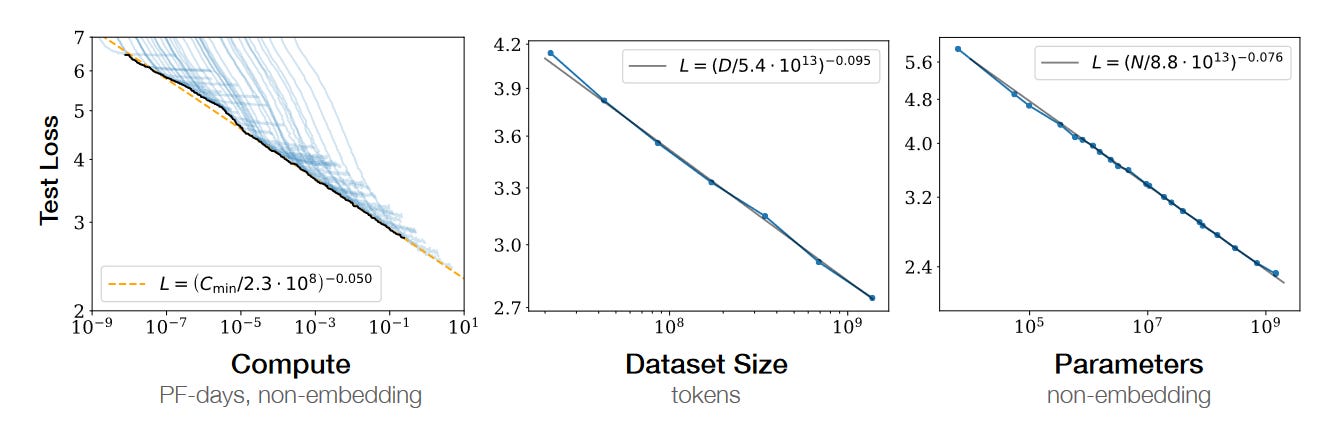

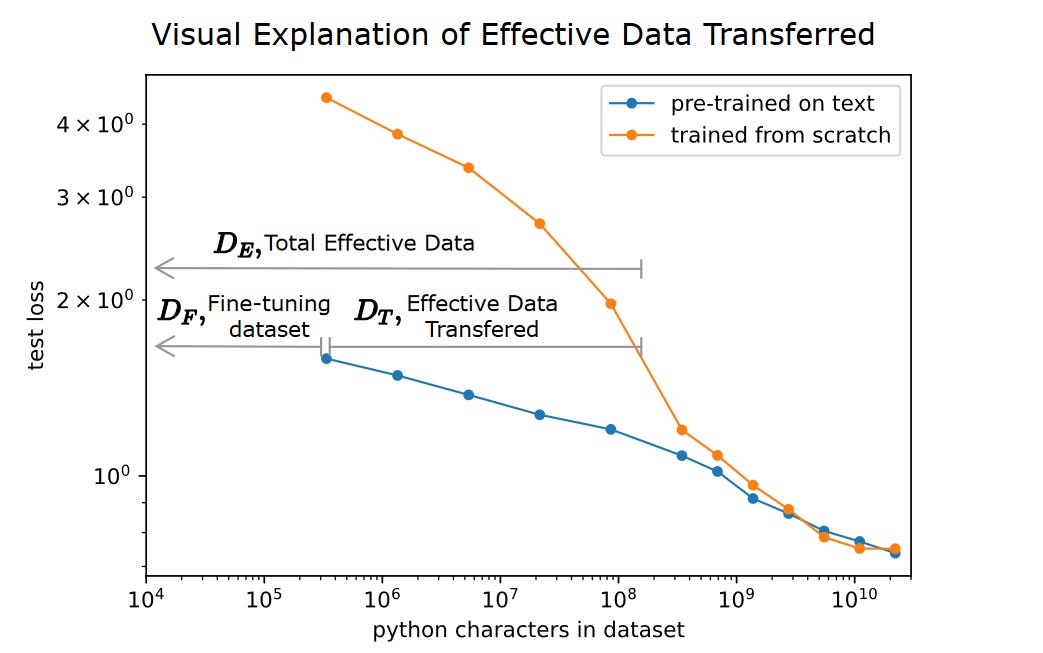

The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin. The ultimate reason for this is Moore’s law, or rather its generalization of continued exponentially falling cost per unit of computation. Most AI research has been conducted as if the computation available to the agent were constant (in which case leveraging human knowledge would be one of the only ways to improve performance) but, over a slightly longer time than a typical research project, massively more computation inevitably becomes available. Seeking an improvement that makes a difference in the shorter term, researchers seek to leverage their human knowledge of the domain, but the only thing that matters in the long run is the leveraging of computation. These two need not run counter to each other, but in practice they tend to. Time spent on one is time not spent on the other. There are psychological commitments to investment in one approach or the other. And the human-knowledge approach tends to complicate methods in ways that make them less suited to taking advantage of general methods leveraging computation…

The bitter lesson is based on the historical observations that 1) AI researchers have often tried to build knowledge into their agents, 2) this always helps in the short term, and is personally satisfying to the researcher, but 3) in the long run it plateaus and even inhibits further progress, and 4) breakthrough progress eventually arrives by an opposing approach based on scaling computation by search and learning. The eventual success is tinged with bitterness, and often incompletely digested, because it is success over a favored, human-centric approach.

That is, in the long run (i.e., in the data and compute limit), general machine learning methods invariably outperform their feature-engineered counterparts—whether those “features” are the data features, the model architecture, the loss function, or the task formulation, all of which are types of inductive biases that place a prior on the problem’s solution space.

General methods win out because they meet the territory on its own terms:

The second general point to be learned from the bitter lesson is that the actual contents of minds are tremendously, irredeemably complex; we should stop trying to find simple ways to think about the contents of minds, such as simple ways to think about space, objects, multiple agents, or symmetries. All these are part of the arbitrary, intrinsically-complex, outside world. They are not what should be built in, as their complexity is endless; instead we should build in only the meta-methods that can find and capture this arbitrary complexity. Essential to these methods is that they can find good approximations, but the search for them should be by our methods, not by us. We want AI agents that can discover like we can, not which contain what we have discovered. Building in our discoveries only makes it harder to see how the discovering process can be done.

As Sutton notes, this lesson is a hard one to digest, and it’s probably not immediately apparent how it applies to biomedicine. So, let’s briefly examine three example cases of the bitter lesson that can act as useful analogs for the biomedical problem domain. In particular, I hope to highlight cases where machine learning methods superficially take the bitter lesson to heart, only to be superseded by methods that more fully digest it.

Language Understanding, Generation, and Reasoning

Cyc has never failed to be able to fully capture a definition, equation, rule, or rule of thumb that a domain expert was able to articulate and communicate in English. — Parisi and Hart, 2020

ML can never give an explicit step-by-step explanation of its line of reasoning behind a conclusion, but Cyc always can. — Cyc white paper, 2021

Here’s an abbreviated history of how AI for language progressed over the past 50 years:

Neural networks experienced a brief moment of hope in the early 60’s, but this was quickly dashed. Neural networks became unfashionable.

From then onward, the dominant approach to language-based reasoning became symbolic AI, which is modeled after “how we think we think”, to use Sutton’s phrase. This approach was realized in, for instance, the expert systems which became popular in the 70’s and 80’s—and it is still being pursued by projects like Cyc.

In the 90’s and 00’s, simple statistical methods like Naive Bayes and random forest began to be applied to language sub-tasks like semantic role labeling and word sense disambiguation; symbolic logic still dominated all complex reasoning tasks. Neural networks were outside the mainstream, but a small group of researchers continued working on them, making important breakthroughs.

But by the early 2010’s, neural networks started to take off, demonstrating state of the art performance on everything from image recognition to language translation (even though in some cases the solutions had been around for more than twenty years, waiting for the right hardware and data to come along). However, these neural networks still used specialized architectures, training procedures, and datasets for particular tasks.

Finally, in the past five years, Sutton’s bitter lesson has been realized: a single, general-purpose neural network architecture, fed massive amounts of messy, varied data, trained using very simple loss functions and lots of compute, has come to dominate not only all language tasks, but also domains like vision and speech—part of the “ongoing consolidation in AI”:

You can feed it sequences of words. Or sequences of image patches. Or sequences of speech pieces. Or sequences of (state, action, reward) in reinforcement learning. You can throw in arbitrary other tokens into the conditioning set—an extremely simple/flexible modeling framework.

The neural network naysayers were proven wrong and the believers vindicated: in 2022, massive language models are resolving Winograd schemas at near-human levels and explaining jokes—and on many tasks surpassing average human performance. And yet, they’re only able to do this because we do not hard-code our understanding of semantic ambiguity or humor into the model, nor do we directly ask the model to produce such behaviors.

Game-Playing Agents

The evolution of AI for game-playing agents parallels that of AI for language in many ways. To take chess as an example, we can divide it into three eras: the DeepBlue and Stockfish era, the AlphaZero era, and the MuZero era.

Both DeepBlue and Stockfish use massive brute-force search algorithms with hand-crafted evaluation functions (i.e., the function used during search to evaluate the strength of a position) based on expert knowledge, and a host of chess-specific heuristics. Chess researchers were understandably upset when these “inelegant” methods began to defeat humans. As Sutton tells it:

In computer chess, the methods that defeated the world champion, Kasparov, in 1997, were based on massive, deep search. At the time, this was looked upon with dismay by the majority of computer-chess researchers who had pursued methods that leveraged human understanding of the special structure of chess. When a simpler, search-based approach with special hardware and software proved vastly more effective, these human-knowledge-based chess researchers were not good losers. They said that “brute force” search may have won this time, but it was not a general strategy, and anyway it was not how people played chess. These researchers wanted methods based on human input to win and were disappointed when they did not.

Whereas AlphaZero dispenses with all the chess-specific expert knowledge and instead uses a neural network to learn a value function atop a more general search algorithm. Furthermore, it learns this all by training purely through self-play (that is, by playing with itself—it isn’t given a bank of past chess games to train on, only the rules of the game). It is so general an architecture that it can learn to play not only chess, but also Go and Shogi.

AlphaZero not only handily beats Stockfish, but does so using less inference-time compute, making it arguably more human-like in its play than its “brute-force” predecessors:

AlphaZero searches just 60,000 positions per second in chess and shogi, compared with 60 million for Stockfish…AlphaZero may compensate for the lower number of evaluations by using its deep neural network to focus much more selectively on the most promising variations—arguably a more human-like approach to searching, as originally proposed by Shannon.

Finally, MuZero takes AlphaZero and makes it even more general: it learns to play any game without being given the rules. Instead, MuZero bootstraps a latent dynamics model of the environment through interacting with it, and uses this learned dynamics model for planning. This latent planning allows it to learn tasks, like Atari games, which were previously not seen as the province of model-based reinforcement learning because of their high state-space dimensionality. In fact, MuZero is so general a learning method that it can be applied to non-game tasks like video compression. If you can give it a reward signal, MuZero will learn it.

Autonomous Vehicles

Autonomous vehicles (AV) are by far the most instructive of the analogs, because the battle is still being played out in real time and many people are mistaken about which approach will win. Additionally, the psychological barriers to arriving at the correct approach (namely, an inability to abstract) are similar to the psychological barriers end-to-end machine learning approaches in biomedicine will face.

We can divide modern AV approaches into two basic camps: the feature-engineering camp of Waymo et al., and the end-to-end approach of Comma.ai and Wayve (and, to a lesser extent, Tesla). Both camps use “machine learning”, but their mindsets are radically different.

The classical approach to AV—pursued by Waymo, Cruise, Zoox, et al. (all the big players in AV that you’ve likely heard of)—decomposes the task of driving into a set of human-understandable sub-problems: perception, planning, and control. By engineering human knowledge into the perception stack, this approach hopes to simplify planning and control.

These companies use fleets of vehicles, decked out with expensive sensors, to collect massive amounts of high-resolution data, which they use to build high-definition maps of new environments: