Andrew is a data scientist who has published on what language models can tell us about personality structure. He writes about machine learning, personality, and the evolution of consciousness at VectorsOfMind.com and works at a startup making satellite images more searchable.

“When the woman saw that the fruit of the tree was good for food and pleasing to the eye, and also desirable for gaining wisdom, she took some and ate it. She also gave some to her husband, who was with her, and he ate it. Then the eyes of both of them were opened, and they realized they were naked; so they sewed fig leaves together and made coverings for themselves.” Genesis 3:6-7

By some accounts, Homo Sapiens evolved 200,000 years ago. However, there is little evidence of sapient behavior for most of that time period. Stone tools remained the same for tens of thousands of years, meaning countless generations lived and died without any innovation. There was no art and likely no storytelling. About 50,000 years ago, a distinctly human culture emerged. The tools became more complex. Styles and methods of production changed over the course of hundreds of years rather than tens of thousands. Art, religion, and calendars were invented. From then on, change has been the only constant. Many scientists think language evolved around this time. It looks like the dawn of inner life.

Much of the disagreement on when we became human is disagreement about what makes us human. Ironically, one of our clearest quirks is the need for narrative, especially for who we are and where we came from. All cultures answer these questions. The scandal of Darwinian evolution was contravening religion to explain them by material causes—natural selection degree by degree. Instead of being created in the image of God, man and beast henceforth belonged to the same family tree. We undoubtedly share a common ancestor with other animals. But this elides the deeper question of what makes us human. We may share 99% of our DNA with chimps, but on the meaningful traits humans are categorically different. We have language and symbolic thought. We are native dualists who feel that, at the core, we are made of spiritual stuff. A dog has never had an existential crisis.1 More than unique, these attributes seem to be binary; an organism either has them or doesn’t. How did we evolve these defining features bit by bit? Were there times when humans were only half capable of symbolic thought? What would that even look like? This is one of the great open questions of science.

The tension between anatomical modernity 200 kya (thousand years ago) and Behavioral Modernity 50 kya permeates the study of human origins. See, for example, Michael Corballis’s The Recursive Mind: The Origins of Human Language, Thought, and Civilization. He makes the case that recursive thinking undergirds self-awareness, language, counting, and imagining the future. The whole human package is tightly wound up by a single principle. But the timeline is confusing. He’s “certain” that a Homo Sapiens from 200 kya raised today could become a lawyer, artist, or scientist without any problem. He claims there are no cognitive differences on these time scales but later spends several paragraphs detailing how, starting 40 kya, there was a flowering of culture that sure looks like the emergence of recursion, and maybe it evolved then. In some ways, it’s an optimistic ideology. “Yes, there are no signs of sapience 200-40 kya, but if an early Homo Sapiens were raised in the modern school system, they’d be completely normal. Evolution can’t touch the brain in a mere 200,000 years.” But the flip side of that coin denigrates the human spark. It implies that in the Stone Age, things we consider fundamental simply didn’t develop. The impulse to make art and search for meaning was not always found in our species. Rather, it’s a tic of whatever environments humans have been living in over the last 50,000 years. If everyone else stopped asking existential questions, or even doodling, you would, too. Or at least a child would if raised in that barren world. Not only is it a bleak vision of human nature, but if language had evolved by 200 kya, then understanding that moment is hopeless. Time gobbles up evidence. However, if it started to emerge 50 kya, we may be able to reconstruct the story. The finishing touches of linguistic thinking could have been evolving fairly recently.

I choose to frame human origins in terms of a soul. This essay’s subtitle could be “How humans evolved an irreducible self-referential ‘I’,” but that would divorce my project from thousands of years of thinkers and the grounding power of natural language. The meaning of “soul” is an agreement between millions of people about the essence of a self, the seat of agency, and connection to the divine. When grappling with what it means to be human, common language offers a guardrail.2 And in this case, a target for what must be explained. Where did souls come from?

Given that and the reference to Eve, I should clarify the relationship to Christianity. I think that Genesis and many other creation myths are remarkably good phenomenological accounts of the first man to think, “I am.” Based on the archeological and genetic data, this may have happened about 50 kya. Comparative mythologists tell us some stories have lasted that long, and if any story would be preserved for millennia, it would be our genesis.

My thesis is that women discovered “I” first and then taught men about inner life. Creation myths are memories of when women forged humans into a dualistic species. That sounds fantastic, but we have to have evolved at some point (and it must have been fantastic). Further, weaker versions of the idea are still interesting. For example, I hold that snake venom was used in the first rituals to help communicate “I am.” Hence the snake in the garden, tempting Eve with self-knowledge. Even if those rituals do not figure in human evolution or our discovery of consciousness, it would be extraordinary if a psychedelic snake cult from the Paleolithic is remembered in Genesis as well as by the Aztecs. I’d watch that Netflix series!

In several blog posts, I have developed what it would look like to discover “I,” how it could have been communicated to others, why women would have been the vanguard, how it could evolve by degrees, and what sort of cultural and genetic marks such a process would leave. But these arguments are scattered across posts, and in the meantime, I’ve found more supporting evidence. For example, earlier, I mused that snake venom could have been used as a hallucinogen. It turns out that this is well documented in ritual settings, including among the architects of Western civilization.

This essay starts with a discussion of what it means to be human. This is essential to establish a common framework but, unfortunately, buries the lede. The most original research is on the worldwide Snake Cult. If you’re short on time, you could start there. Or, if you prefer audio, there is a narration available by Askwho Casts AI. (And if you enjoy it, consider buying them a coffee on Patreon.)

One last bit of business. Rationalists often start an essay by signaling how seriously one should take the argument. Epistemic status: Human origins are inherently speculative, and this is a passion project outside my area of expertise (psychology and AI). For this post, I’ve read maybe a dozen books and 100 papers. One way to think about this theory is interpreting data by asking, “How recently could humans have become sapient?” rather than using the institutional bias that seeks to push that date back as far as possible.3 So, proceed with caution, but that’s par for the course for this subject.

Outline

What makes us human? Recursive self-awareness.

Weak EToC, without any reference to mythology. Recursive culture spread, and this caused genetic selection for recursive abilities wherever it went.

The Snake Cult of Consciousness: giving the Stoned Ape Theory fangs.

What makes us human?

“In the beginning, there was only the Great Self in the form of a Person. Reflecting, it found nothing but itself. Then its first word was: “This am I!” whence arose the name “I” (Aham).” Brihadaranyaka Upanishad 1.4.1

“I” is the beginning of many creation myths. This is made explicit in the Hindu verse quoted above. Or consider the Egyptian account where Atum rises out of the primordial ocean of chaos by saying his name. There are echoes in the Bible as well. After eating the Fruit of Knowledge, Adam and Eve became self-aware—self-conscious even—and realized their nakedness. The ability to reflect on oneself then produced alienation. Adam could no longer live in unity with God and nature. He had to leave the Garden.

The New Testament can be read as a development of this idea. John begins his gospel with a nod to Genesis: “In the beginning was the Word, and the Word was with God, and the Word was God.” Word here refers to Christ, the answer to the alienation wrought by The Fall of Adam and Eve. Jesus began his ministry by claiming to be the great “I Am,” one of the Jewish names of God (John 8:56–59). It is a passage that goes over the heads of many English readers but not his Jewish audience, who immediately took up stones to kill him. They understood the claim to be “I Am God, the Self-Existing One, who appeared to Abraham, Isaac, and Jacob.” I hope it’s not an abuse of the transitive property to say, “In the beginning was the I Am.”

These myths teach that living started with “I,” that God is ultimately self-referential, and that this same divine spark exists within man. Beyond “I,” creation myths also cite ritual, language, and technology as what separates man from beast.

In Australia, Aboriginal legend holds that civilizing spirits brought the first people language, ritual, and technology. Thus, Dreamtime ended, and time began. Similarly, the Aztecs teach that before modern humans, there lived a race of man built of wood, lacking soul, speech, calendars, and religion. A great flood wiped out this penultimate race, and humanity only survived by temporarily transforming into fish. Clearly, these myths cannot be taken literally. However, their core has held up remarkably well. When scientists answer what makes humans unique, they point to self-awareness, language, religion, and our relationship to time and technology. Many even suppose that all of these form a tight package that can be explained by “recursive” thought. It follows that, in reality, the whole package would have evolved more or less at once.

The fact that creation myths are phenomenologically accurate doesn’t need explaining. The narrative landscape is competitive, and only the most psychologically true survive, particularly in the crowded space of cosmogony. However, details in the world’s creation myths suggest they share a common root deep in the past. In fact, they seem to hail from about the time humans first started expressing “recursive” behaviors. This opens the possibility that they aren’t accurate by accident or in spite of themselves. They could be memories of the transition to sapience.

Recursion is useful

Natural selection works because traits are passed down from parent to child. If a trait allows a parent to have more children, then that trait will become more common in the population. So, if the ability to digest cow’s milk or think abstract thoughts is useful, these traits will become more prevalent with each generation. Given that, what abilities does recursive thinking enable?

Recursion is a process in which a function or procedure calls itself either directly or indirectly. In other words, it's a method where the solution to a problem depends on solutions to smaller instances of the same problem. This can be as simple as standing between two mirrors and looking at the reflection of the reflection of your reflection. The last reflection depends on those that came before it. The concept is widely used in computer science and mathematics for solving complex problems by breaking them down into simpler, more manageable parts.
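For readers who like to see it in code, here is a minimal Python sketch of the two-mirrors picture. The function names are made up for illustration. Each call hands a slightly smaller version of the problem back to the same function until it reaches a stop condition (the "base case").

```python
def reflection(n: int) -> str:
    """Describe the n-th reflection seen between two facing mirrors."""
    if n == 0:
        # Base case: no mirror involved, just the person standing there.
        return "you"
    # Recursive case: the n-th reflection is a reflection of the (n-1)-th.
    return "a reflection of " + reflection(n - 1)


def factorial(n: int) -> int:
    """Classic textbook recursion: n! is defined in terms of (n-1)!."""
    if n <= 1:
        return 1
    return n * factorial(n - 1)


if __name__ == "__main__":
    print(reflection(3))
    print(factorial(5))
```

In both functions the last answer depends on every answer before it, which is the sense in which the last reflection "depends on those that came before it."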

In computer science, a recursive function is one that calls itself. Often, each successive call will be a sub-routine, where the input gets simpler and simpler until reaching some stop condition. If that is too technical, don’t worry. Just know that algorithmically speaking, recursion is a superpower. Consider the fractal below. The most straightforward way to store the image is to enumerate the color of each pixel. But there are lots of pixels, and because there is structure in most images, they can be stored far more efficiently with some tricks. Under the hood, JPEG uses recursion to compress images, without which the algorithm would be orders of magnitude slower.4

One can go one step further for this image because it is generated with a recursive process. Therefore, the image can be losslessly encoded with the few bytes required to write the recursive program that originally produced the image—a few lines of code. Not only that, but one could zoom in to any edge and see the fractal recapitulate itself forever on increasingly finer scales. Recursion is almost alchemical in producing so much from so little. In the words of the legendary computer scientist Niklaus Wirth:

The power of recursion evidently lies in the possibility of defining an infinite set of objects by a finite statement. In the same manner, an infinite number of computations can be described by a finite recursive program, even if this program contains no explicit repetitions.
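To make "so much from so little" concrete, here is a short sketch that draws a Sierpinski triangle in text. This is a stand-in chosen for brevity, not the program behind the image discussed above, and the function name is an assumption. The whole figure is defined by one rule: a triangle of a given order is three copies of the triangle one order smaller.

```python
def sierpinski(order: int) -> list[str]:
    """Return the rows of a text Sierpinski triangle of the given order."""
    if order == 0:
        # Base case: the smallest triangle is a single mark.
        return ["*"]
    smaller = sierpinski(order - 1)
    # Pad the upper copy on both sides so it sits centered over the lower two.
    pad = " " * (2 ** (order - 1))
    top = [pad + row + pad for row in smaller]
    # Place two copies side by side, separated by one space.
    bottom = [row + " " + row for row in smaller]
    return top + bottom


if __name__ == "__main__":
    print("\n".join(sierpinski(4)))
```

Raising `order` by one doubles the number of rows and triples the number of marks, yet the program stays the same handful of lines. That is Wirth's point in miniature, with the caveat that a real program has to stop at some finite depth.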

But humans aren’t computers. How does the brain use recursion? In the 1950s, the linguist Noam Chomsky departed from the blank-slate behaviorists by positing that a Universal Grammar constrained all languages. That is, humans have an instinct for language. Just as spiders weave webs of a certain design, human cognitive hardware comes wired to learn a particular type of grammar. This isn’t grammar in the sense of how to conjugate verbs, which varies by language. Rather, Universal Grammar is a meta-rule that applies to every language due to the design of the brain. In his 2002 article "The Faculty of Language: What Is It, Who Has It, and How Did It Evolve?" co-authored with Marc Hauser and Tecumseh Fitch, Chomsky argued recursion was the key feature of the human language faculty. Every language uses recursion as the basis of its grammar.

As in other domains, linguistic recursion means that sentences may be parsed via self-referential subroutines. For example, the sentence “Watson wrote that Holmes deduced the body was in the shed” can be divided into three parts:

X1 = Watson wrote

X2 = Holmes deduced

X3 = the body was in the shed

What did Watson write? To know, one must first parse X2, which in turn requires parsing X3. There is a recursive hierarchy. The sentence’s meaning completely changes with each additional clause, which can go on indefinitely. We can prepend Jane said that John said that Harold said that… to X1 + X2 + X3 forever. Even though there is a finite set of words, there is no longest grammatical sentence. Recursion pries infinity out of finite building blocks. Not that we go about speaking infinitely long sentences. But in practice, it does greatly increase the complexity of ideas that can be expressed. The Universal Grammar is built on the same rule as fractals.
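The nesting of X1, X2, and X3 can be sketched in a few lines of Python. The representation is an assumption made for illustration (each clause is a pair of its own words plus the clause embedded inside it), not a claim about how linguists or brains actually parse. The joining word "that" is inserted at every level, so the rendered sentence differs slightly from the original wording.

```python
# A clause is (words, embedded_clause); the innermost clause embeds None.
X3 = ("the body was in the shed", None)
X2 = ("Holmes deduced", X3)
X1 = ("Watson wrote", X2)


def render(clause) -> str:
    """Spell out a clause by first spelling out whatever is embedded in it."""
    words, inner = clause
    if inner is None:
        return words  # base case: nothing left to unpack
    return f"{words} that {render(inner)}"


def depth(clause) -> int:
    """Count how many clauses are nested inside one another."""
    words, inner = clause
    return 1 if inner is None else 1 + depth(inner)


if __name__ == "__main__":
    print(render(X1))
    # Prepending a speaker is just one more layer of wrapping.
    print(render(("Jane said", X1)))
    print(depth(("Jane said", X1)))
```

Nothing in `render` limits how many layers can be wrapped around X1, which is the sense in which there is no longest grammatical sentence. In practice Python's recursion limit plays the role that memory and patience play for human speakers.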

Astute readers may be reeling at the potential bait and switch. Just because we use recursion to describe all these things doesn’t mean they are the same. And that’s fair. There probably are some differences. But it’s entirely in the mainstream to lump many types of recursion. It is an active area of research to test the degree to which recursion in processing music, language, vision, or motor planning uses the same neural architecture.5 Or consider the work of psychologist and linguist Michael Corballis. Along with language, he adds several other recursive superpowers in his book The Recursive Mind: The Origins of Human Language, Thought, and Civilization. These include the ability to introspect, count, and think about the future. As this is an imagined future, it also implies the ability to create fiction, worlds that do not exist. This is the beginning of art, spiritual life, and the human condition.

So, recursion is useful. With it, humans became culture-dependent beings with a language instinct. But more importantly, for individuals, recursion is the basis of consciousness. That dual purpose (pardon the pun) is important to remember, even though consciousness and evolutionary fitness are often treated separately.

Recursion is essential for consciousness

Introspection requires recursion by definition. If the self perceives itself, that is recursion. So the phrase “I think, therefore I am” is recursive on multiple levels. Recursive grammar connects the two phrases, and the mind is directed at itself.

Descartes reasoned that the reality of anything could be doubted, with one exception. You stretch out your hand and feel a table? Well, some have been known to hallucinate such things. It may not be there. The self is the only thing he could not reason away, for it exists by definition if there is a thinker doing the doubting. "I doubt, therefore I think, therefore I am."

There is an even subtler layer of recursion in that phrase. “I” is recursive at rest, not just when it is perceiving itself. One way to think of this is in terms of abilities. The division between your conscious and subconscious mind is that which you can and can’t introspect, not what you are currently introspecting. Similarly, the limits of “I” can be defined by what can recursively reference itself, not whether it is performing that operation at any moment.

More sophisticated arguments can be made. Douglas Hofstadter’s classic I Am a Strange Loop presents the idea that “I” is a result of the same type of self-referential paradox that Gödel used to “break” mathematics. The paper Consciousness as recursive, spatiotemporal self-location includes a dozen citations connecting recursion to consciousness, as does the Stanford Encyclopedia of Philosophy entry on Higher-Order Theories of Consciousness. Many bright people hold that the core of what we call “living” requires recursion.

We take our relationship to duality and time for granted, but these are both built on the foundation of recursion. It’s worth trying to understand that before moving on.

Disruptions of self are also disruptions of subjective time. Diseases that affect the ego, such as Schizophrenia and Alzheimer’s, also disrupt the experience of time. Anyone can dabble in this arena by taking psychedelics which produce ego-death. Such a trip can be 15 minutes on the clock and feel like decades. A more mundane example is the flow state, which seems to draw out time.

As mentioned earlier, mental time travel—thinking about the future or past—is useful. It’s not an exaggeration to call it time travel, given you are simulating the future, which allows one to flexibly plan for it. This is different from instinctive behavior, like a squirrel burying food for the winter. In fact, humans can use mental time travel to think themselves out of instincts. Humans had followed their prey for eons. Imagine the first hunter-gatherers to settle down. They must have had some idea of the next season and reasoned that they didn’t need to follow the herds because they had planted some crops (or had some other alternative).

Living outside the moment is a new kind of alienation from the material world. Many know Joseph Campbell for the Hero’s Journey, the idea that there is a basic template to which all (most?) stories conform. This has been popularized as a list of actions a hero takes to transcend himself and society and then reintegrate. In Campbell’s last book, he described how all stories blossomed out of recursion:

in the old creation myth from the Bṛhadāraṇyaka Upaniṣad of that primordial Being-of-all-beings who, in the beginning, thought “I” and immediately experienced, first fear, then desire. The desire in that case was not to eat, however, but to become two, and then to procreate. And in this primal constellation of themes—first, of unity, albeit unconscious; then of a consciousness of selfhood and immediate fear of extinction; next, desire, first for another and then for union with that other—we have a set of "elementary ideas,” to use Adolf Bastian’s felicitous term, that has been sounded and inflected, transposed, developed, and sounded again through all the mythologies of mankind through the ages. And as a constant structuring strain underlying the everlasting play of these themes, there is the primal polar tension of a consciousness of duality against an earlier, but lost, knowledge of unity that is pressing still for realization and may indeed break through, under circumstances, in a rapture of self-loss.

Narrative is predicated on self-awareness. This was recognized in antiquity and further developed by the likes of Campbell and Jung in the 20th century. With self-reference, our animal drives became fractal symphonies of yearning and imagination.6 Before “I,” there were no stories, and there was no such thing as “living”.

In psychology and linguistics, it is a dominant view that recursion underlies humans’ most overpowered competitive advantages. In philosophy, it is widely held to be a requirement for consciousness, at least of the sort humans have. The next section tries to weave utility and subjectivity into a unified model.

The Original Spin: some models of the first recursive thought

In The Faculty of Language, Steven Pinker and Ray Jackendoff discuss why recursive thought evolved: “Here the problem is not a paucity of candidates for evolutionary antecedents but a surfeit.” Still, they offer some possibilities: music, social cognition, the visual decomposition of objects into parts, and the formulation of complex action sequences. I’d like to offer one more: that the driving recursive thought was “I am.”

Describing this epiphany brings to mind a group of blind men feeling an elephant, declaring it consists of tree-trunk legs, ivory spears, or flaps of rough skin. Like them, I will give several related models that I hope describe humans’ transition to sapience.

The first model draws from Freud, dividing the psyche into id, ego, and superego. The id is basic animal nature to meet physical needs. The first bacteria must have had something like this: navigate towards a comfortable salinity. In humans, this is expanded to food, sex, shelter, and the like. The superego is unique to humans. It is your model of society’s expectations: what others expect, whether abstracted as “society” or embodied in particular people such as a mother or chief. The ego is also unique to humans and mediates between these two often contradictory forces. This implies that it evolved after the superego.

Theory of Mind preceded recursive self-awareness. Before recursion, the superego was made up of models of how others would behave and what they would expect, just as it is now. However, a pre-recursive ego is a different beast: a proto-ego. The proto-ego, too, was a model of mind (in this case, one’s own). As a mediator, it would have been well connected to both the superego and the interoceptive system, keeping track of the body’s needs (an important part of the id). The first “I am” corresponds to the ego becoming self-referential, receiving itself as input. The self finally perceived the self.

In other words, we built a map of our mind, and the map became the territory “I.” Or, as Joscha Bach put it, “We exist inside the story that the brain tells itself.” This suggests a straightforward answer to the ancient question of what language has to do with consciousness. Consciousness requires self-reference, which in turn allows full grammatical language. Both come from recursion. (It’s worth noting words existed before full grammatical language or self-reflection. Adam named the animals while in Eden, after all.)

One shortcoming of this model is that it makes the transition seem like the emergence of a thing, the ego. It’s more rightly understood as discovering a new space or dimension. My favorite analogy is thousands of years old and common to many religions. With recursion, humans evolved a new eye that can perceive symbolic space. A Third Eye, if you will. Just as our eyes allow us to see the electromagnetic spectrum, this new self-referential architecture in our brain allows us to perceive the symbolic realm. The abstract world of art, math, (Platonic) ideals, and the future.

Richard Dawkins said there were two great evolutionary moments. The first was the emergence of DNA, which marked the beginning of biological evolution. The second was the emergence of memes. Just as genes propagate themselves by leaping from body to body via sperm or eggs, memes propagate themselves in the meme pool by leaping from brain to brain. We receive ideas, refine or mutate them, and pass them on. Over the long run, the best ideas win. At this point, humans are wholly dependent on the highly evolved memes distributed over countless human brains (and now books and computers). The memetic universe is our natural habitat to a much greater degree than the material world. This is what it means to “exist inside the story the brain tells itself.” A brain that can produce such an “I” has a privileged view of the memetic universe. We are the only species that can “see” most memes—that is, hold and perceive abstract or symbolic ideas. The first person to think “I am” was, in a very real sense, stumbling into a new dimension. Since then, we have built castles in the sky and on earth as well. Humans reign supreme in the material world because we are the sole natives of the memetic niche.

The final model I present is the one that led me down this rabbit hole to begin with. Many scientists say language has evolved in the last 100,000 years. Why, then, is the inner voice such a core feature of conscious thought? How has language been so fully integrated into thinking if consciousness goes back a hundred million years?

Consider the thought experiment: what did the first inner voice say? In Consequences of Conscience, I reasoned that, given the social nature of our species, the first inner voice may have been a moral injunction like “Share your food!” But the content does not matter so much. It could also have said “Run!” when one of our ancestors’ unconscious noticed that the birds had gone too quiet all of a sudden. The question is, would she have identified with the first inner voice? I think not. Identity is complex and requires recursion. Hallucination does not and is still common. This suggests reframing “When did recursion first evolve?” as “When did humans first identify with their inner voice?”

While writing a piece on inner voices (Consequences of Conscience), I struggled to convey the phenomenological significance of this moment. It struck me that I could do no better than Genesis, which reads a lot like Adam being taught his inner voice is him. Before that understanding, what would the hallucinated voices of the superego have been, if not the gods, doling out advice or commands? It follows that Satan told the truth when he said Eve’s eyes would be opened and she would become as the gods.

That would necessitate Genesis hailing from the beginning of phenomenological time. Taking that idea seriously has all the hallmarks of a wild flight of fancy. But I think mostly by convention. Fundamentally, the question is how long information can be preserved in myth and how long ago humans first demonstrated inner life. Surprisingly, there is strong evidence that myths can survive from about the time humans started doing anything that indicates recursive thoughts. Perhaps unsurprisingly, no scientifically-minded person has tried to put two and two together in a very long time. That is this fool’s errand.

Eve Theory of Consciousness (EToC)

Whether Genesis could be a cultural memory of the discovery of the human condition comes down to two questions:

How long can a myth last?

When did we become human?

If those are about the same length, then Genesis could be a memory of our genesis. Both questions are difficult but not totally intractable. I write about the first question here, giving several examples of global memes that were first evidenced about 30,000 years ago. For statistical reasons, the simplest example is the Seven Sisters. In dozens of cultures from Greece to Australia to North America, the Pleiades star cluster is said to represent Seven Sisters, even though only six stars are visible. The discrepancy is often a plot point in the story: a missing sister. Given this detail, Seven Sisters myths worldwide must share a common root. It’s not a plot that would independently emerge.

The seven stars are painted on cave walls in France 21 kya and Australia in the mid-Holocene, where they are also part of the Dreamtime creation myth. Most researchers interpret this to mean the myth is around 100,000 years old. As I’ll explain later, there is no need to posit anything much more than 30,000 years. In large part because, in relation to question 2, there is no compelling evidence of recursive thinking (including fiction) before Behavioral Modernity 40-50 kya. That transition is debated, which we will return to. But for now, all that needs to be established is that there is considerable overlap between mainstream estimates of 1 and 2. I’ll outline a weak and strong version of how recursive culture could have spread. Starting with the weak, which does not rely on any religious text, and then moving to the strong, which interprets common details of creation myths as meaningful.

Weak EToC

Humans today have a fairly seamless construction of self. There are cracks around the edges, particularly if you do drugs, meditate, or have schizophrenia. But many get through life taking “I” for granted from the time they are about 18 months old. In the beginning, this would not have been the case. Recursive loops are inherently unstable. There are steady configurations, but it’s unlikely our cognitive wiring leapt from no recursion to recursion as a load-bearing infinite loop without significant evolution. This would take generations of natural selection for the seamless construction of self at a young age.

It’s instructive to imagine the first person to think, “I am.” Modern humans become self-aware as toddlers, it seems. They can at least use “I” correctly and pass a mirror test, and their brain scans no longer look like they’re on an acid trip. My guess is the first sapient person was not a toddler because toddlers aren’t particularly introspective or good with Theory of Mind. In that case, the first sapient person would have been an adult who had lived their life up until that moment in non-reflective unity. Let’s call her Eve. It’s possible that she was either pregnant or going through puberty when she had the realization, as those are periods of great brain reorganization, particularly related to social cognition. At any rate, once “I am” was obtained by adults or adolescents, selection for recursion would mean developing “I” younger and younger. Eventually, this drove the age down to ~18 months.

There also would have been selection for more functional recursion. By this, I don’t mean more intelligent or better at grammar, though that is part of it. The clearest lens is phenomenological. The evolution of a soul opens up the whole spirit world, much of which is haunted. The first humans would have been far more schizophrenic, not knowing exactly where “I” started and other imagined specters began. Hallucinating voices is the best-known symptom, but schizophrenia also includes a loss of sense of agency and a feeling that one’s body (or some part) does not belong to them. Go back far enough, and this would have been the norm. And farther still, there would be no “owner” at all. There is a spectrum of how smoothly recursion runs as the default mode. Modern disruptions like epilepsy or schizophrenia map onto that spectrum but are minor compared to the variation that existed in the past. The first thousand light bulbs were extremely faulty by today’s standards. The same is true of the light of consciousness. As I’ve written before, the intermediate evolutionary period could be called the Valley of Insanity. The longer recursion took to evolve, the more time humans spent as Homo Schizo.

The first thousand people to have thought “I am” may have lost that train of thought and gone on living in unity with the universe. If they had such a concept, “altered states of consciousness” would have referred to duality—ego birth, not ego death. Imagine the first person for whom “I am” stuck for any length of time. What would it be like to explain the situation to the rest of the tribe? Absolute lunacy. Like describing the taste of “salt” to a silicon-based alien who only speaks Spanish. Nobody understood the first Eve, and the evolutionary meat grinder kept churning. Given recursion is devilishly useful, those who tended to have the “I” epiphany may have also tended to be better at other (proto-)recursive tasks, such as social navigation or counting, causing them to have more children. Even a small correlation between “I” and these tasks would be sufficient for a temporary experience of “I” to be more common hundreds of generations after the first epiphany. And there likely was a correlation, given the extent to which the brain uses recursive networks for many tasks.

At some point, critical mass would be reached. Enough people would experience “I”—though perhaps only sporadically—to build a culture around it. This would create a steep fitness gradient for those who can participate in recursive culture. In other words, the less-recursive folks died or had fewer kids. Consider all the ways, over many thousands of years:

Language becomes recursive, and with it the jokes around the campfire, instructions on making an ax, and the endless small-tribe gossip.

Shamanism and the entire spiritual plane are only appreciated by those who can experience duality.

More sophisticated deceit requires recursion. With duality, one must learn to wear a mask. Everyone else is a sitting duck to the social technology of saying one thing and meaning another.

Recursion changes one’s relationship with time, allowing more flexible planning for the future. This is expressed in language with past and future tenses, further complicating grammar.

Music and dance use recursive structures.

This selection process could have happened fairly quickly. Let’s say “seamless construction of self” is about as heritable as schizophrenia (~50%) and is correlated r = 0.1 with fitness (number of surviving children). This is fairly conservative, as nowadays people with schizophrenia only have about 50% as many children (a huge fitness penalty). The law of the Paleolithic jungle may have been even harsher on those with a fractured grip on reality.

Plugging those conservative parameters into the Breeder’s Equation, recursive ability (uninterrupted function and acquisition at younger ages) could increase one standard deviation every 20 generations or ~500 years.7 Here is how far recursion would shift in a population over 2,000 or 5,000 years:

In 2,000 years, there is almost no overlap between the populations. By comparison, this is about the same as the difference in height between 8- and 12-year-old boys. By 5,000 years, there is no overlap. Those are cognitively distinct populations. Now consider 20,000:

There are a lot of assumptions that go into a model like this, but the primary one that needs to hold is consistent selection for recursion. That is, those with a seamless construction of self need to have ever so slightly more children than those who do not. That seems perfectly reasonable. A correlation of r = 0.1 is barely perceptible in real life, and there are advantages to recursive thinking. Remember, Dawkins said the emergence of memes is one of two great evolutionary moments. Only recursive thinkers could access that great well of knowledge. The ability to understand recursive culture is completely overpowered as a strategy to pass on one’s genes. In the last 50,000 years, we have used this tool to conquer the world, driving many species to extinction and enjoying exponential population growth along the way. Who was having more kids then? Those who were marginally better at recursion. It’s hard to imagine a scenario where there wasn’t selection in that time period.
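The arithmetic behind that estimate can be sketched in a few lines of Python. This is a rough illustration rather than a population-genetics model. It assumes the correlation r = 0.1 can be used directly as the selection differential in standard-deviation units, that heritability and variance stay constant, that a generation lasts 25 years (implied by 20 generations being ~500 years), and that both populations are normally distributed with equal spread. The function names are my own, chosen for illustration.

```python
import math


def response_per_generation(heritability, selection_differential_sd):
    """Breeder's equation, R = h^2 * S, with S and R in standard-deviation units."""
    return heritability * selection_differential_sd


def shift_in_sd(years, heritability=0.5, selection_differential_sd=0.1,
                years_per_generation=25):
    """Cumulative shift of the population mean, assuming constant selection."""
    generations = years / years_per_generation
    return generations * response_per_generation(heritability,
                                                 selection_differential_sd)


def overlap(shift_sd):
    """Shared area of two unit-variance normal curves whose means differ by shift_sd.

    The shared area is 2 * Phi(-d / 2), which simplifies to erfc(d / (2 * sqrt(2))).
    """
    return math.erfc(shift_sd / (2 * math.sqrt(2)))


if __name__ == "__main__":
    for years in (2_000, 5_000, 20_000):
        d = shift_in_sd(years)
        print(f"{years:>6} years: shift = {d:.1f} SD, overlap = {overlap(d):.2e}")
```

Under these assumptions the mean moves 4 standard deviations in 2,000 years, 10 in 5,000, and 40 in 20,000. At 4 standard deviations the two curves share roughly 5% of their area, and at 10 the shared area is effectively zero. Weaker or intermittent selection would stretch these timelines proportionally.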

So, when I say “evolutionary time scales,” I mean long enough to have no overlap between the recursive abilities of two populations. Long enough to be cognitively foreign. Tribes where men develop consciousness as infants vs. during puberty or have schizophrenia rates of 1% vs. 10%. It turns out that may be as short as a few thousand years. And this estimate is well within the bounds of mainstream answers. In fact, Noam Chomsky says it took just one generation.

Chomsky and another linguist named Andrey Vyshedskiy have both proposed theories where a single mutation 50-100 kya enabled recursion, and we are all descendants of that fortunate forbear. This solves the question of degrees (it was a single gene, like a light switch) and scraps the Valley of Insanity. It is also almost certainly wrong. Recursive functions are liable to be unstable, so it would be a great surprise if that were worked out in one fell swoop. Thousands of genes influence schizophrenia and language ability. The evolution of inner life must implicate just as many. Moreover, we have now sequenced the genes of millions of people, including hundreds of prehistoric humans. In the words of population geneticist David Reich, if there was a “single critical genetic change,” it is “running out of places to hide.” Much of what I write is speculative, but I don’t see a way to get around the transition to sapience being very psychologically strange. There must have been a time when inner life was far more fractured. Once recursion started to evolve, it was such a competitive advantage that it would have made up for any fitness loss, including unpleasant side effects like demonic possession and cluster headaches, which would have been passed along with it. Often, human self-domestication is discussed in terms of becoming more pro-social and feminine. Yes, but I think the steepest selection gradient must have been for the “seamless construction of self.” A cleanly delineated “I” and feeling as though one is in control of one’s body. And for this to develop young.

This is a model of what was evolving; now let’s consider the when. Below is a timeline that will be familiar to most, put together by an evolutionary anthropologist for The Conversation:

It acknowledges that the evidence for human behavior really only starts around 65 kya with the “Great Leap.” However, art, language, music, marriage, and storytelling are projected back to 300 kya. The reasoning is that these are cultural universals among living humans, so they must go back to our genetic root. Branches of humanity have been separated for 300,000 years, so art must go back at least to then. As the article puts it:

“We inherited our humanity from peoples in southern Africa 300,000 years ago. The alternative – that everyone, everywhere coincidentally became fully human in the same way at the same time, starting 65,000 years ago – isn’t impossible, but a single origin is more likely.”

This is simply not true, given culture can spread. If recursive culture diffused at the cusp of sapience, it would change the fitness landscape wherever it went. Non-recursive or semi-recursive people could have evolved into the memetic niche in the subsequent thousands of years. It’s also wrong about genetic isolation. Humanity’s most recent common ancestor—the most recent person everyone alive is related to—is much more recent than 300 kya. Scientific American cites studies that estimate this to be just 2-7 kya. Genes get around. If there were genes important for recursion, they could have spread starting 50 kya. In the footnote, I go into other problems with the 300 kya date.8 But I don’t want to spend too much time on it. The purpose of this essay is to separate our cultural and genetic roots. Recursive culture could spread and then cause selection for modern human cognition. In theory, this could happen even without genetic contact between groups.

This anthropologist’s framing embeds another common assumption: humans must have been fully human from the moment there is evidence of art or other modern behavior. But the point I have been trying to hammer home is that it would have taken time for recursion to evolve. The first person to think “I am” was not like us. Nor were the first artists 40 kya. If we were to establish a trans-temporal adoption agency, children from 40 kya would not grow up to be lawyers, doctors, or engineers. They would be conscious, but in a modern city they could very well end up institutionalized. Evolving something as subtle as a Third Eye takes time.

The best way to understand when humans came online is to look at the archeological record and at evidence of natural selection in our genomes. Starting with archeology, the date 65 kya used in the graphic is quite generous. The most impressive art from this period looks like:

This is not very good evidence of recursive thinking. I would be surprised if a magpie made that, but it would not be the most clever thing I’ve seen an animal do. It doesn’t require a notion of the self, the future, or fiction. Compare that to the Venus figurines produced from Europe to Siberia starting 40 kya:

There are many interpretations of Venus statues, all of which require recursion. Most poignantly, these may have been self-portraits, which is about the art one would expect to be produced with the discovery of “I.” Moreover, at about this time, all over the world, one can find sure examples of recursion. As mentioned earlier, counting requires recursion. The oldest tally stick dates back 44 kya in South Africa. Notably, there are 28 notches, and it has been suggested that this was made by a woman to keep track of her menstrual cycle, though it could also have been the lunar cycle. Tracking these cycles is one of the first technologies one would expect to develop with the discovery of subjective time. Finally, Indonesia is home to the oldest known narrative art, a cave painting dated 45 kya. Like other early examples of recursion, there is a connection to women. Much of the earliest cave art is of hand prints. The digit ratios indicate that women left three-quarters of these.

These artifacts tick all the recursive boxes: counting, art, storytelling, and interest in selfhood, duality, and time. In the literature, this transition is referred to as Behavioral Modernity. The idea that our minds took their now-current form 40-20 kya was dominant up until the 1990s. For example, the current curator of paleoanthropology at the Harvard Peabody Museum wrote:

“The time between 50,000 and 30,000 years ago saw the spread of modern man out of his hypothetical "Garden of Eden" until, through a process of swamping and replacing older and more archaic subspecies of H. sapiens, he inherited the earth.” ~ The Ascent of Man, David Pilbeam

Ascent was written in 1972 and reprinted in 1991, not all that long ago. As recently as 2009, psychologist Frederick L. Coolidge and anthropologist Thomas Wynn wrote: “The most parsimonious interpretation is that modern executive functions did not emerge much earlier than 32,000 years ago.”9

However, in the last several decades, evidence of deep genetic splits within Africa has complicated this view, and more inclusive definitions of humanity (sometimes without recursive language) have become popular. But again, one of the advantages of EToC is that it allows for separate genetic and memetic roots for humanity. Demonstrating deep genetic splits does not mean that someone 300,000 years ago had the genetic endowment for recursion, much less stable recursion.

Most readers are probably aware of the transition to Behavioral Modernity 40-50 kya. Less well known is that the transition was a process. The cultural level reached in Eurasia 40 kya was not achieved worldwide until much later. For example, a 2005 paper argues that hallmarks of the symbolic revolution are only evidenced in Australia in the last 7,000 years.10 Further, before the Holocene (12 kya), the Australian cultural toolkit most closely resembles Europe and Africa in the Lower and Middle Paleolithic (3,300-300 kya and 300-30 kya, respectively). In other words, the tools were millions of years out of date. In evolutionary time, this was before Homo Sapiens, Neanderthals, or Denisovans even existed. Homo Erectus called, and he wants his stone tools back.11 (It’s important to note that the authors use this to argue against Behavioral Modernity. They don’t think it represents a significant evolutionary change, given its recency.)

More broadly, the archeologist Colin Renfrew proposed the Sapient Paradox, which asks: If humans have been cognitively modern for 50k, 100k, or 300k years, why isn’t modern behavior widespread until roughly the end of the Ice Age? According to Renfrew, “From a distance and to the non-specialist anthropologist, the Sedentary Revolution [12 kya] looks like the true Human Revolution.”

He is not alone. Michael Corballis presents two possible periods for the evolution of recursion: 400-200 kya and 40-10 kya.12 Just last year, linguist George Poulos argued that “language, as we know it today, probably began to emerge about 20,000 years ago.” Likewise, linguists Antonio Benítez-Burraco and Ljiljana Progovac propose a four-stage model for the evolution of language, with recursion only present in the last 10,000 years. By their analysis, it was not until then that humans demonstrated behavior complex enough to need recursive language. In addition to social complexity, they point to the global shift to a more globular skull shape in the last 35,000 years. It’s a quirk of the term “Anatomically Modern Human.” This is applied to humans 200 kya, but it doesn’t mean “could pass for a modern human.” It means “has features like a gracile skeleton and a reduced brow ridge that are now common to humans and distinguish us from extinct members of the genus Homo.” Our skulls are not the same as even 50 kya!

If there were a rewiring of the brain for recursion in the last 50,000 years, then one would expect:

Changes in skull shape

Lots of new genetic mutations related to cognition

As previously mentioned, skulls were becoming more feminine and globular in this period. Turning to the second expectation, the paper Genetic timeline of human brain and cognitive traits is instructive. Below is a plot of new genes entering the gene pool. Notice the surge peaking 30 kya, many of which are cognitive genes. Like the archeological record, not much is going on 200 kya. When does it look like we entered a new niche and encountered new problems that required new genetic material to solve?

The authors highlight that “genes containing recent evolutionary modifications (from around 54,000 to 4,000 years ago) are linked to intelligence (P = 2 × 10⁻⁶) and neocortical surface area (P = 6.7 × 10⁻⁴), and that these genes tend to be highly expressed in cortical areas involved in language and speech (pars triangularis, P = 6.2 × 10⁻⁴).”

A 2022 paper found signals of strong selection for brain and behavior-related genes in the last 45,000 years, as well as from 80-45 kya. They suggest these are adaptations to handle the relatively colder environment of Saudi Arabia as humans left Africa:

“While neurological functions initially appear surprising, it is possible that this observation mostly relates to the critical role the nervous system and brain play in coordinating, integrating, and subsequently regulating diverse physiological processes, which are impacted by cold environments.”

One wonders if Saudi Arabia was cold enough to cause such significant changes in brain function.13 They also mention that, “provocatively,” it could indicate cognitive evolution for sociality.14 (And I would add recursion, which is first evidenced around this time.)

So, the weak version of EToC is that recursive culture spread and changed the fitness landscape. Recursion was not always evenly distributed over the world, but a rough timeline is that there were moments of duality 50-100 kya15, and recursion was “put to work” 50 kya when it was psychologically integrated enough to begin building culture around recursive skills. Given recursion is found all across the world soon after, this culture must have spread. At that time, “I” was not necessarily a constant, uninterrupted fixture. Individuals’ relationships with their inner voices could have been wildly different. At some point, perhaps fairly recently, the seamless construction of self became the norm worldwide, and disruptions are now pathologized.

The bulk of selection would have been in the last 50,000 years. How could there have been selection for symbolic thinking before there was symbolic culture? Selection would have happened little by little at first. It must have started with barely recursive humans producing barely recursive culture. Perhaps this was a stable equilibrium for some time, given all of the problems recursion causes. At a certain point of cultural complexity, recursive thinking would become table stakes, creating a steep selection gradient.

The question of what makes us human goes back thousands of years. Most of what I present is a review. From the many competing theories I chose to emphasize recursion (rather than, say, symbolic thought) because it is a natural way to show that the human transition was both practical and phenomenological. We evolved a soul, and that allowed us to conquer the world. Most accounts of self-domestication emphasize getting along and non-aggression, the difference between wolves and dogs. For humans, however, the change has been categorical. Homo Sapiens means “Thinking Man.” Non-recursive humans were not sapient. Their relationship with time and the material world is as different from ours as water is from ice. Humans are absolutely singular as a species. This isn’t really a contribution, as it’s been said for thousands of years. My contribution is how I think this transition could have played out over evolutionary time scales. Also noteworthy is that my (very incomplete) model of what makes humans special is not required for the rest. If you don’t agree with recursion, substitute “symbolic thinking,” “language,” “higher-order thinking,” or your preferred definition of “soul.” The only real requirement is that it is a phase change.

Many researchers think something biological changed ~50 kya, and language (or recursion) is on the shortlist. Where I diverge somewhat is the emphasis on the gene-culture interaction and how long the process could have taken. Even if there were moments of sapience 50 kya, fully modern psychology could have been obtained much later. The weak version of EToC posits that “recursive culture” spread in the last 50,000 years. To defend that idea, it’s easiest to say exactly what that was. The strong version of EToC holds that women, by and large, first understood “I am.” Later, they developed psychedelic snake rituals to aid in that epiphany, and those rituals spread. The male perspective is preserved in many creation myths, including Genesis. This is why I emphasized the phenomenological implications of recursion. Stories worth retelling concern changes in consciousness, not technology. Therefore, if recursion evolved within the reach of oral tradition, what would be passed down with the most reverence are the changes to do with time, self-awareness, agency, and duality. Not utilitarian concerns of more complex grammar or stone tools (though their introduction is also remembered).

The Snake Cult of Consciousness

“And the serpent said unto the woman, Ye shall not surely die. For God doth know that in the day ye eat thereof, then your eyes shall be opened, and ye shall be as gods, knowing good and evil.” Genesis 3:5

The expulsion of Adam and Eve was the result of natural law, not a capricious god doling out contradictory commandments. Once Eve perceived herself as an agent and the voice in her head as her own, she could no longer dwell in blissful ignorance. She became responsible for her actions and aware of her mortality. Others have offered this interpretation. Julian Jaynes even related it to identifying with the inner voice. But what’s with the snake?

If you took a time machine to visit humans at the cusp of recursion, could you teach them about “I”? What would you try? I would embed “I” into a horror ritual. An escape room where the only way out was in. This would pull on many biological levers, including psychedelics, given their ability to help change one’s mind. The acute effects include opening the mind and enabling novel thoughts. Particularly affected are functions related to introspection and consciousness.

It’s a bit odd that a class of drugs known most for ego death could be involved with ego birth. But the proposed mechanism is more a “brain reset” during which an initiate has many new ideas—hopefully including “I am”—followed by weeks of increased brain plasticity where those can be integrated. This is not my idea. Terence McKenna proposed the Stoned Ape Theory in his book Food of the Gods: The Search for the Original Tree of Knowledge.

For McKenna, the relationship between consciousness and psychedelics was practical. When he tripped, he saw consciousness being constructed in his mind. One of his most eloquent expositions is on entities he described as self-transforming machine elves—fantastical creatures made of language. “I don't know why there should be an invisible syntactical intelligence giving language lessons in hyperspace. That certainly, consistently, seems to be what is happening.” Based on his schooling in hyperspace, he figured that language was fundamental to consciousness and that psychedelics could help in obtaining it.

McKenna argued the Food of the Gods was psilocybin mushrooms. But they only play a minor role in religious history. In fact, a much better candidate is in Genesis itself: snakes.16 Their venom is a psychedelic that contains large amounts of nerve growth factor. Not only that but they are worshiped as consciousness-granting globally and have been over evolutionary time scales. I propose the original Fruit of Knowledge was snake venom.

This section starts with a chemical investigation and then moves backward in time, from modern snake venom rituals to antiquity to the Stone Age.

Snake Venom as Entheogen

"Venom worked out for me very well a long time ago. It took my life away, but it gave me something more precious than life." ~Sadhguru, “Why I drank snake venom”

In the 1970s, the classicist Carl Ruck coined the term entheogen (literally, “god within”) to refer to hallucinogens used to induce altered states of consciousness. Importantly, this is not just any alteration; they must be used to access the “divine within.” Most cultures seem to have at least one entheogen of choice, be that opium, cannabis, coca leaves, iboga root, salvia, ayahuasca, psilocybin mushrooms, betel nut, acacia, or bufo toad, to name a few. Often left off this list is snake venom, a glaring hole in the literature that I aim to address here.

The first question is chemical. Can snake venom function as an entheogen? There are a few papers—enough to merit a review article—on snake venom as a recreational drug. The case reports are similar to those of psilocybin mushrooms. In one, the venom is intravenously delivered from fang to tongue by the neighborhood snake charmer. With a single dose, the patient reports changing long-ingrained behaviors. Following a decade of dependence on alcohol and opiates, he gave both up cold turkey after one kiss of the cobra. Vice also has a mini-doc about the phenomenon in India and a one-off case in the UK.

Psychedelics stimulate the production of Nerve Growth Factor (NGF), which allows for greater brain plasticity. Therefore, if you want to Change Your Mind, as Michael Pollan puts it, then psychedelics are an excellent tool. The medical field is currently in a psychedelic boom where these drugs are being tested to treat pretty much any psychiatric issue.

Snake venom does more than just stimulate the production of NGF; it brings its own to the party. In the 1950s, laboratories sourced NGF from brain tumors in mice. When snake venom was used to process the tumors, the resulting NGF was much more effective. Upon investigation, the researchers found that snake venom by itself contains NGF 3,000-6,000 times as potent as that derived from tumors, the previous best source.

The induced plasticity is not just acute, nor solely to do with NGF. One recent paper argues that snake venom could be a cornerstone of treatment in neurodegenerative diseases: “a component of the Indian Cobra N. naja17 venom with no significant similarity to nerve growth factor, is shown to induce sustained neuritogenesis [growth of connections between neurons].” It is currently being researched to treat Alzheimer’s Disease and depression. This is heartening, but modern medicine is young yet. Most of the potential remains unknown. One recent paper put it: “snake venoms can be considered as mini-drug libraries in which each drug is pharmacologically active. However, less than 0.01% of these toxins have been identified and characterized.”

There is the question of delivery. I’m not sure if NGF is bioavailable when injected on the tongue or taken orally mixed with milk, as Sadhguru did. This paper finds the tongue is an excellent location to pass the blood-brain barrier (even without injection). But there is more than one way to skin a cat and even more to puff the magic dragon. Classicist David Hillman suggests snake venom concoctions were administered as anal suppositories at Greek temples. Delivery does not seem to be a limiting factor.

The final criterion is whether venom is ritualized in a spiritual setting. There are dozens of YouTube videos of the Hindu guru Sadhguru discussing why he drank snake venom. In his words:

“Venom has a significant impact on one’s perception if you know how to make use of it…It brings a separation between you and your body… It is dangerous because it may separate you for good.” The Unknown Secret of how Venom works on your body [practical experience]

Venom’s spiritual use is not limited to one guru. The next sections will look at the mythological and archeological evidence around the world. This is organized in an ever-expanding radius: proto-Indo-European, Eurasia and America, and then worldwide.

Proto-Indo-European

“Serpents were milked to access their venom as psychoactive toxins, both to serve as arrow poisons, but also as unguents in sub-lethal dosages to access sacred states of ecstasy.” ~Carl Ruck, The Myth of the Lernaean Hydra

The Romans copy-pasted as much of Greek culture as they could. Looking back at that effort, the Roman orator Cicero waxed eloquent about the Eleusinian Mysteries:

“For it appears to me that among the many exceptional and divine things your Athens has produced and contributed to human life, nothing is better than those Mysteries. For by means of them we have been transformed from a rough and savage way of life to the state of humanity, and have been civilized. Just as they are called initiations, so in actual fact we have learned from them the fundamentals of life, and have grasped the basis not only for living with joy, but also for dying with a better hope.” M. Tullius Cicero, De Legibus, ed. Georges de Plinval, Book 2.14.36

The Eleusinian Mysteries were the quintessential Greek celebration of death and rebirth. They told the story of Persephone’s abduction to the underworld and the grief-stricken search by her mother, Demeter, for her return. At the heart of this tale was said to be the secret of life. Or, in the words of the Greek poet Pindar, “Blessed is he who, having seen these rites, goes beneath the hollow earth; for he knows the end of life, and he knows its god-sent beginning.” What was revealed about the beginning of life is, well, a mystery. It was a mystery cult, and the punishment for revealing their secrets was death. But even more, words do not seem adequate for the task. Homer, not one to be at a loss for words, nevertheless demurs in his description: “Great awe of the gods makes the voice falter.” What happened at Eleusis had to be experienced to be understood.

Still, there are some hints. In 1978 Ruck’s The Road to Eleusis scandalized the field of classics by making a case that the core of the initiation was psychedelic. Brian Muraresku revisited that argument in his recent best-seller The Immortality Key: The Secret History of the Religion with No Name. (Check out Sam Harris’s interview.) Muraresku interprets the promise “If you die before you die, you won’t die when you die” as a reference to ego death induced by fungus. As an engineer, I think of these possibilities in mechanical terms. Psychedelics are a powerful cognitive tool. It’s not surprising that the most powerful religious technology enlisted their charms. Still, there are better candidates for the entheogen at Eleusis.

In the second century AD, the emperor Marcus Aurelius was initiated into the Mysteries. He is reportedly the only lay person ever allowed inside the holy of holies within the main temple. As emperor, he rebuilt the temple after it was nearly destroyed by the barbarian Costoboci in AD 170. His bust rests in the courtyard, with a snake emblazoned on his chest.

Aeschylus, the father of Greek tragedy, was also an initiate, and many of his plays dealt with the Mysteries. In one, he seems to have flown too close to the sun and was almost executed for revealing too much. Consider Hillman’s interpretation.

“Before ancient seers summoned spirits like Allecto from the underworld—an arcane practice known as necromancy—they invoked Bacchus, the god of ecstatic dance. The worship of this mystery cult divinity, known variously as Dionysus, Bromius, and Zagreus, was connected in classical literature and art with the handling of the European horned viper (Vipera ammodytes)...It appears that the priestesses of Hecate, Priapus, and Demeter/ Persephone were involved in the consumption of viper venom. [Aeschylus was nearly executed for revealing the secrets of the Eleusinian mysteries in his plays on Orestes. One of these plays (The Cup-bearers) contains a dream of a “dragoness” in which her breast milk is injected with the venom of a snake.]...Some of them are even called “dragonesses,” and are involved with the “burning off” of human mortality.”

The playwright Aristophanes also profaned the mysteries, which Hillman connects to snake venom in another paper that discusses the dragonesses/drakaina:

“The Drakaina, or δρακαινα , was said to bring about her powers by mixing/preparing drugs in potable or consumable form. There are numerous named Drakainai, among the most famous being Clytemnestra. Aristophanes was accused of profaning (revealing) the Mysteries in his trilogy on Orestes. In his Libation Bearers, Aeschylus records that the Drakaina produced and administered a mixture of blood and milk after being bitten in the breast by a snake/dragon (514 ff.) Orestes even describes his own “snakeification” or transformation into the Dragon. (Line 549: ἐκδρακοντωθεὶς δ᾽ ἐγὼ, “I myself too have brought out the dragon.”)

…

Dragon priestesses entered an ecstatic or Bacchic state of mania, during which they claimed to experience and influence transdimensional forces or “daimones” (demons) by manipulating “waves” of timespace distortions produced by “darkened stars.” The Greeks used the word ἔνθεος [entheos, literally “god within”] to describe this strange star-induced ecstasy, and mystics claimed it was part of a process of manifesting the “god within the breast” of the initiate.”

This was the subject of his dissertation. He explicitly states that the Mysteries used snake venom as an entheogen, and he provides dozens of primary references.18 Still, it’s nice to have a second opinion, this one about the oracle at Delphi (where the high priestess was called the Pythoness):

“The Sioux believed that if a young, dancing man was bitten by a snake and didn’t die, he would experience a universal awakening. (Since ancient times, dancing was felt to be an ecstatic mimesis of the mystical and is still seen as the means by which mysteries are disclosed.) Snake venom was lapped to induce trances at Delphi. Even some scientists testify to experiencing altered states from snakebite, seeing visions and feeling enormous capabilities.” Drake Stutesman, Snake, 2005

We will return to snake dances. For now, the fact that Ancient Greeks used venom as an entheogen appears to be common knowledge among at least a subfield of classicists.19 At least it is well-documented that various temples housed snakes.20 And though it may be a salacious rumor, Clemens, an Egyptian pagan philosopher who converted to Christianity circa 200 AD, wrote that the Mysteries included snake orgies dedicated to Eve.21 At any rate, given the Mysteries extend back to prehistory, it is prudent to look to sister civilizations for corroborating evidence that snake venom was used as an entheogen.

In the 1580s, the Florentine merchant Filippo Sassetti noted that the Sanskrit words for God, serpent (!), and some numbers sounded like his native Italian. So began the Proto-Indo-European (PIE) hypothesis: India and Europe were part of the same cultural stock, sharing a root deep in the past. In the subsequent centuries, no prehistoric group has been the subject of more linguistic and archeological interest. The PIE people are thought to have lived 6,000-9,000 years ago somewhere around the Black Sea. Their descendants spread over much of Eurasia, taking their language, religion, and customs with them. Today, 46% of the world’s population speaks an Indo-European language natively.

Having established the connection, we turn from Greece to India to triangulate venom use among the PIEs. There, the potion of enlightenment is called Soma, and it, too, has mythic associations with snakes and milk.

“Snakes (often symbolizing women) perform an alchemy that women perform by turning blood into milk. In the village ritual, milk is fed to a snake; the snake then turns this into poison, which in turn is rendered harmless by Soma (or by the shaman, who controls Soma, drugs and snakes). Yogis, the inverse of mothers in terms of fluid hydraulics, drink poison, which they regard as Soma, and thus have power over snakes. A yogi can also drink poison and turn it into seed, and he can turn his own seed into Soma by activating the (poisonous?) coiled serpent goddess Kundalini” Karma and Rebirth in Classical Indian Traditions, Wendy Doniger O'Flaherty, 1980, page 54.

Once again, snake venom and milk are symbolically mixed up with life itself. This theme pervades Indian religion. After obtaining enlightenment, the Buddha is sheltered from a storm by the Naga king. And Naga enlightenment is not a dead religion. The venom-drinking Sadhguru has millions of followers and a penchant for resurrecting esoteric rituals involving snakes. There are many videos of him on YouTube discussing the ritual significance in perfect entheogenic language. And, like Aeschylus, he combines snake venom with milk. I don’t think anyone has argued for a PIE tradition of snake venom Soma, even though it seems to be well documented in sacred rites in Greece and India. (Or, as we’ll discuss later, Soma could have been the antivenom consumed before the encounter with a snake.)

But my claim is not about the Proto-Indo-Europeans. It is about the world. And it is not about ego death in Ancient Greece but the evolutionary origins of ego. We have to go deeper.

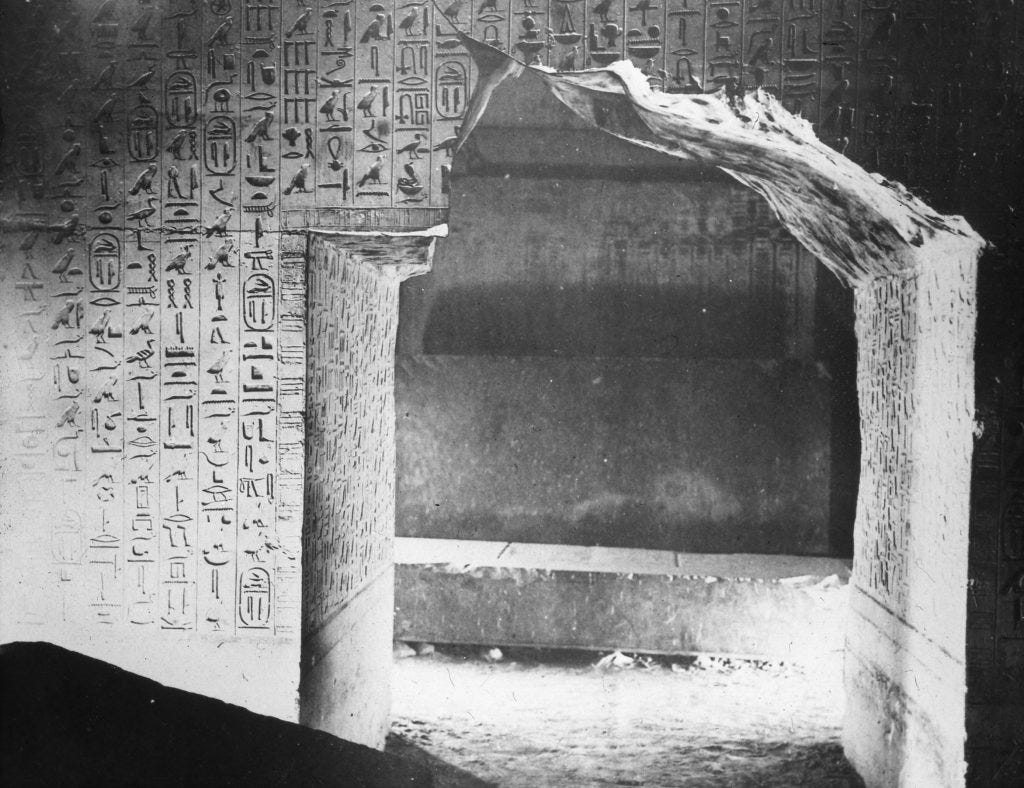

Eurasia and the Americas

The Pyramid Texts are among the earliest written religious texts in the world, dating back to Egypt's Old Kingdom, around 4.5 kya. They consist of a vast body of spells, prayers, and incantations inscribed on the walls and sarcophagi of the pyramids at Saqqara, intended to protect and guide the pharaohs in the afterlife. The Pyramid Texts offer invaluable insights into ancient Egyptian beliefs regarding the cosmos, creation, and the gods.

Like many other traditions, Egyptians held that there was only chaos in the beginning, often represented as an ocean. Earlier, I referenced an Egyptian tradition where Atum emerged from the ocean by saying his name. This passage reflects another tradition where the first being is Neheb-ka:

“I am the outflow of the Primeval Flood, he who emerged from the waters. I am the ‘Provider of Attributes’ serpent with its many coils. I am the Scribe of the Divine Book, which says what has been and effects what is yet to be.” ~Pyramid Text 1146, Egypt 2,500 BC

Neheb-ka is translated as “Provider of Attributes”, though it could also be “That which gives Ka” or “He who harnesses/yokes the Ka,” where Ka is spirit, soul, or double. So there it is, carved in stone; souls were originally harnessed by a snake. By them, man was made double. Or at least the Egyptians thought so.

Figure 38 below, taken from Robert Clark’s Myth and Symbol in Ancient Egypt, shows that idea lived on until the 12th century BC during Rameses VI’s reign, where Time and Form are shown to emerge from the Cosmic Serpent. (Remember, the experience of time directly results from recursion.)

In ancient Egypt, wisdom and perception were often symbolized or even bestowed by snakes. For example, Neheb-ka gives the Eye of Ra22 to the Pharaoh:

But back to the matter at hand, establishing a common root between American and Eurasian snake myths. Phylogenetic relationships between Greece and India are to be expected, given they both hail from PIE culture 6-9 kya. Going deeper in time meets many methodological issues. However, snake myths are a rare exception where much larger and deeper phylogenies are accepted. How does one square the Egyptian, Hebrew, and PIE conception of life-giving snakes with that of the Chinese, for example? Below is the creator goddess Nuwa with her consort Fuxi, intertwined as serpents:

In the 1880s, Miss A. W. Buckland noted the similarities of serpent worship on either side of the Bering Strait and argued that it, along with sun worship, agriculture, weaving, pottery, and metalwork, spread from Eurasia to the New World with the earliest settlers. Over a hundred years later, comparative mythologist Michael Witzel echoes the claims about snakes. He argues that a creation cosmogony was developed in Eurasia from 40-15 kya, which then spread to the New World. Drawing from traditional cultures in China, Hawaii, Meso-America, Egypt, Greece, England, Japan, Persia, and India, he proposes the proto-creation myth included the slaying of a great dragon, often with the help of a “heavenly drink” such as Soma. After completing this deed, humans were given (or stole) culture: “It is only after the earth has been fertilized by the Dragon’s blood that it can support life.”23

His methods are comparative; extant myths are so similar in Japan, Greece, and Mexico that they must share an ancient root. To see what he meant, let’s look back to prehistoric examples with an eye toward entheogenic use.

In Texas circa 500 AD, a man ate a rattlesnake, fangs, scales, and all. We know this because archeologists found and analyzed his poop (er… coprolite). This is interpreted as being part of a ritual because people usually don’t eat fangs or the rattle. Further, the creation stories in this region are teeming with horned or feathered serpents, a tradition that seems to go back thousands of years based on cave art. For example, see the Serpent Cave in Baja California, dated 7.5 kya. It features an eight-meter-long mural:

The Bradshaw Foundation adds: “The motifs shown on the site's rock art are associated with concepts that refer to creation myths; death and the cyclical renewal of life and the seasons. The central figure of the horned serpent is present throughout the American continent and prevails in the worldview of several native cultures.” Aspects survived 7,000 years to Aztec times:

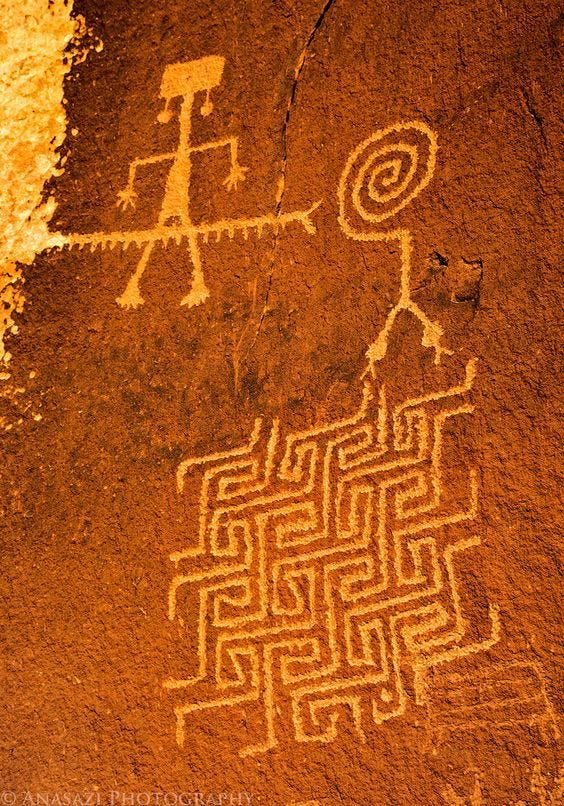

Or, consider rock art found in what is now Utah and Colorado. This snake shaman on the Colorado Plateau is dated24 5-9 kya:

Or these from 1.5-4 kya from the San Rafael Swell in Utah:

Or this from the Buckhorn Wash panel in Utah:

Okay, one more:

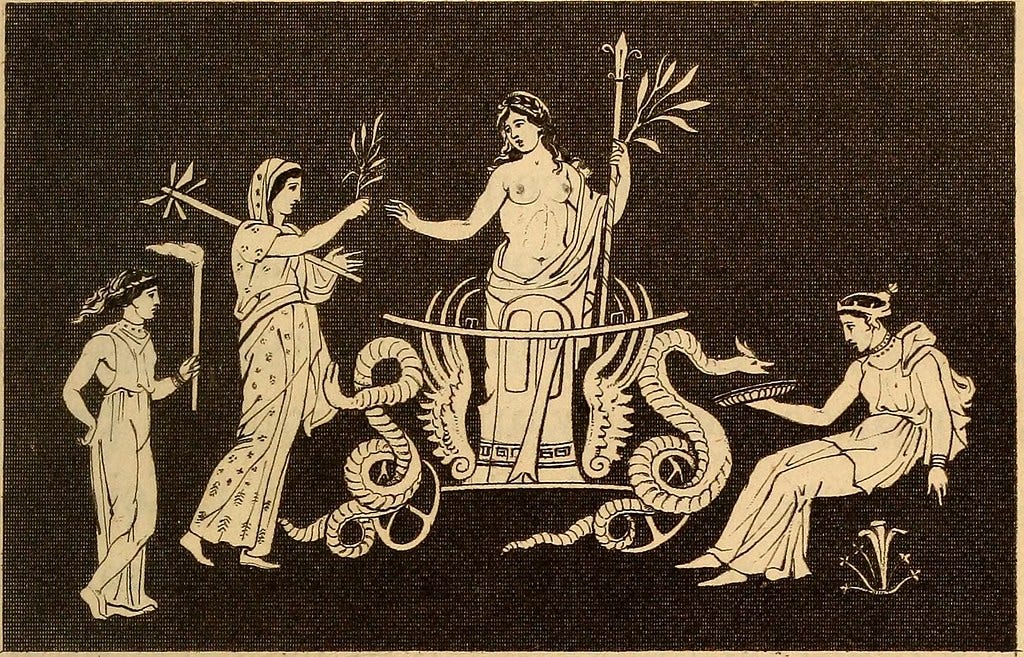

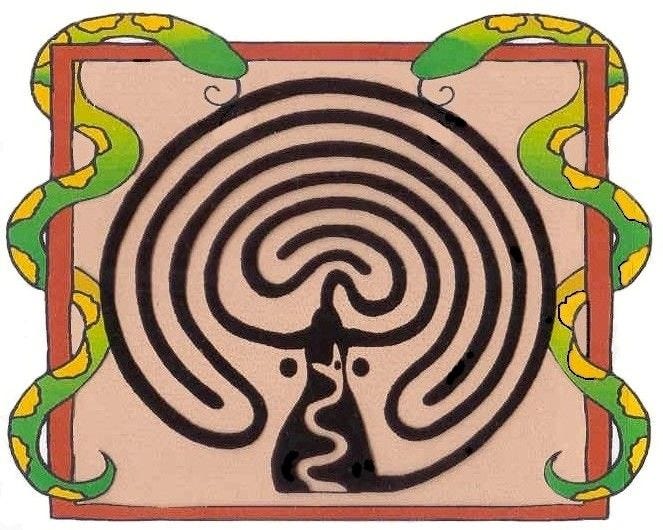

Psychedelic, right? The last image is particularly interesting. What better way to represent a venom-induced trip than a spiraling two-headed serpent entering a maze? The same symbols are common in Eurasia, where the labyrinth has long been used as a metaphor for inner discovery. Here, for example, adopted by a New Age artist:

In Egypt, Nehebkau, Provider of Doubles, is sometimes depicted with two heads. Once again, from Clark:

I’m not necessarily suggesting a phylogenetic relation between mazes or two-headed snakes in the New and Old World. Employing venom as an entheogen could cause these metaphors to be naturally reinvented. What I do contend is that the hallucinogenic practice that produced them spread with the original snake cult. It’s also worth noting that such a practice could evolve as less dangerous entheogens were found (particularly in the Americas), and the snake connection would only live on in legend. Peyote was used in rituals as early as 6 kya, for example.

The paper that analyzed the Texas coprolite was published in 2019 and claimed to be the first to discuss archeological evidence of the ritual digestion of a venomous snake. The next year, a paper published in Nature analyzed a collection of snake bones found in an Israeli cave, deposited 15-12 kya. The authors do not discuss ritual but do observe that the venomous snakes were more likely to be digested than the non-venomous ones.

These Near Eastern snake-eaters are Natufians who genetically cluster with the much later Egyptians. They are a culturally important group that presaged the coming Agricultural Revolution with their own revolutions. The Broad spectrum revolution (exploiting a wider range of food sources, including smaller animals such as fish, snakes, and rabbits) is hypothesized to have allowed the Natufians to adopt a sedentary lifestyle. Staying put, in turn, led to agriculture.

Archeologist Jacques Cauvin argues that the beginnings of agriculture were not so mundane; they marked a change in human consciousness. The Natufians and others in the Near East experienced a “revolution of symbols,” a conceptual shift that allowed humans to imagine gods—supernatural beings resembling humans—that existed in a universe beyond the physical world. It’s worth emphasizing that for Cauvin, this is a cultural, not neurological, change.

His ideas have been given new life by the excavation of Göbekli Tepe, the world’s oldest temple. Klaus Schmidt oversaw the excavation for two decades and said that Cauvin’s central point was proven right: religion preceded agriculture.25 The EToC reading is that recursion was becoming more natural, and along with it, duality and thinking about the future. Agriculture was the result, in part due to the Snake Cult.

It’s difficult to show the entheogenic use of venom 11 kya. However, a full 28.4% of the carved animals at Göbekli Tepe are snakes, nearly double the share of the second most commonly depicted animal, the fox, at 14.8%. And this counts groups of animals as just one occurrence. Snakes, which are often carved in bunches, account for half of all identifiable animals if you break them up as individuals.

Göbekli Tepe is sometimes treated as coming out of nowhere. But from the view of the Snake Cult, it fits nicely into a well-accepted phylogeny of snakes used in rituals of death and rebirth. Snake figurines are a dominant theme in many archeological sites near Göbekli Tepe, including Körtik Tepe, which precedes it by a thousand years. Further north, in Siberia, a boy was buried 24 kya at the height of the Ice Age. In his grave, we find mammoth ivory carved with snakes that look like cobras. Cobras (or any snakes?) did not live in such a frigid climate. These were likely foreign gods that had traveled with these people. Clearly, they had lasting symbolic power. (Similarly, this culture carved many Venus Statues, which were common in Europe at the same time.)

Back in Venus’s motherland, they performed headless snake rituals as far back as 17 kya. Two decapitated snake skeletons were found in a Pyrenees cave decorated with headless bison. Imagine what it would be like to enter that cave by firelight after days of fasting. It would be abundantly clear to an initiate they were about to lose their head. An Indo-European specialist describes the find as evidence of Europe’s first dragon ritual.

Finally, though figures are what gets attention, most cave art consists of abstract symbols. There are 20 or so symbols that are found in cave art the world over. These are thought to be a form of proto-writing whose meaning was consistent across time. Of those, serpents and birds are the only two animal forms, with the serpentiform first appearing 30 kya. The snakes at Göbekli Tepe don’t just pop out of nowhere. They are part of a deep and widely shared cultural heritage remembered from Egypt to China to Mexico.

Many scholars, such as Buckland, Witzel, d’Huy, and White, treat serpent myths in the Americas and Eurasia as stemming from the same root deep in the past. Separately, others argue snake venom was used as an entheogen in Greece and India (and tentatively America). I suggest combining these ideas: snakes are associated with creation because their venom served as the entheogen in the original Eurasian religion, which spread to the Americas. Establishing venom as the primordial Soma is a worthy goal. But for this to be a human story, the whole world must be gathered into the Cult.

This is about half of Andrew’s essay; the second half of “The Eve Theory of Consciousness” can be found on his blog.

To bark or not to bark, that is the question—

Whether 'tis nobler in the mind to suffer

The squirrels and the mailmen of outrageous fortune,

Or to raise a leg against a sea of troubles

And by pissing end them